Wan 2.6 vs Sora 2: The 2025 Video AI Showdown

Introduction

The AI video landscape in late 2025 is dominated by two flagship models:

- Wan 2.6 from Alibaba Cloud

- Sora 2 from OpenAI

Both generate stunning video, but they’re optimized for very different goals. If you’re asking:

- “Wan 2.6 vs Sora 2 — which one should I use for ads?”

- “Which model is better for long, cinematic worlds?”

- “How do I test both in one place and wire them into my product?”

This guide gives you a practical, production‑oriented comparison — and shows how Atlas Cloud lets you try both models in a playground and integrate them via a single API.

TL;DR Quick Comparison (Specs & Pricing Profile)

Wan 2.6 vs Sora 2 at a Glance

| | Wan 2.6 | Sora 2 |
|---|---|---|
| Price | $0.08/sec on Atlas Cloud | $0.05/sec on Atlas Cloud |
| Core focus | Character control & storytelling | World simulation; commercial & cinematic video |
| Typical duration | 5s; 10s; 15s | 10s; 15s |
| Input types | Text‑to‑Video; Image‑to‑Video; Video reference | Text‑to‑Video; Image‑to‑Video |
| Size | Text‑to‑video & video reference: 720*1280; 1280*720; 960*960; 1088*832; 832*1088; 1920*1080; 1080*1920; 1440*1440; 1632*1248; 1248*1632. Image‑to‑video: follows the size of the reference image | 720*1280; 1280*720 |
| Resolution | 720P, 1080P | Not listed |
| Strength | Multi‑shot narrative, face stability, cinematic camera paths | Deep physics, complex environments |
| Audio | Narrative & dialogue | Immersive background soundscapes |
| Best for | Character animation, social media content, rapid ideation | Ads, e‑commerce, filmmaking, professional production |
| Semantic extrapolation | Excels in cinematic scenes | Excels in commercial advertising |
| Shot composition | Intelligent prompt execution | Prompt adherence |
| Consistency | Character consistency | Environmental consistency |
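The price and duration rows translate directly into a per-clip cost. The sketch below hard-codes the table's per-second rates and supported durations. These are a snapshot and may not match current Atlas Cloud pricing. The function rejects clip lengths a model does not list. The keys `wan-2.6` and `sora-2` are illustrative labels, not API model IDs.

```python
# Per-second prices (in US cents) and supported clip lengths, copied from the
# comparison table above. Snapshot values: check current pricing before relying on them.
MODELS = {
    "wan-2.6": {"cents_per_sec": 8, "durations": (5, 10, 15)},
    "sora-2": {"cents_per_sec": 5, "durations": (10, 15)},
}


def estimate_cost(model: str, duration_s: int) -> float:
    """Return the estimated cost in USD of one clip of `duration_s` seconds."""
    spec = MODELS[model]
    if duration_s not in spec["durations"]:
        raise ValueError(
            f"{model} supports {spec['durations']} s clips, not {duration_s} s"
        )
    # Multiply in integer cents, convert to dollars once at the end.
    return spec["cents_per_sec"] * duration_s / 100


if __name__ == "__main__":
    for name, spec in MODELS.items():
        for d in spec["durations"]:
            print(f"{name:8s} {d:2d}s  ${estimate_cost(name, d):.2f}")
```

At these rates a 15-second clip comes to $1.20 on Wan 2.6 and $0.75 on Sora 2. Sora 2 costs less per second, while Wan 2.6's 5-second option ($0.40) is the cheapest single clip.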

On Atlas Cloud, you can:

- Run the same prompt through Wan 2.6 and Sora 2

- See output quality vs cost side by side

- Decide which model gives the best ROI for your specific workflow
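Running the same prompt through both models can also be scripted. This is a minimal sketch with several assumptions:

- Both models accept the `generateVideo` request shape used in the Python example in the API section, with the omitted fields optional.
- The Sora 2 model ID `openai/sora-2/text-to-video` is a hypothetical placeholder; check the Atlas Cloud model list for the real one.
- The network call is passed in as `submit`, so the fan-out logic can be tried without an API key.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# The Wan 2.6 ID comes from the API example in this guide.
# The Sora 2 ID is a HYPOTHETICAL placeholder.
MODEL_IDS = {
    "Wan 2.6": "alibaba/wan-2.6/text-to-video",
    "Sora 2": "openai/sora-2/text-to-video",  # hypothetical
}


def build_payload(model_id: str, prompt: str) -> dict:
    # 10 s at 1280*720 is listed as supported by both models in the table above.
    return {"model": model_id, "prompt": prompt, "duration": 10, "size": "1280*720"}


def run_side_by_side(prompt: str, submit: Callable[[dict], str]) -> Dict[str, str]:
    """Submit one prompt to every model concurrently; map model name -> prediction ID."""
    with ThreadPoolExecutor(max_workers=len(MODEL_IDS)) as pool:
        futures = {
            name: pool.submit(submit, build_payload(model_id, prompt))
            for name, model_id in MODEL_IDS.items()
        }
        return {name: fut.result() for name, fut in futures.items()}
```

In a real run, `submit` would POST the payload to the `generateVideo` endpoint with your Bearer token and return `response.json()["data"]["id"]`. The two prediction IDs can then be polled and the videos compared.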

Model Overview

Wan 2.6 in a Nutshell

Wan 2.6, from Alibaba Cloud, brings major multimodal upgrades and native audio sync. This update gives creators advanced text-to-video and image-to-video tools and produces 1080p cinematic clips up to 15 seconds long.

Key ideas:

- Smart Segmentation (Multi‑Shot Narrative)

Understands shot boundaries and keeps the same character identity across close‑ups, mediums, and wide shots. Great for ads and storyboards where the hero must stay on‑model.

- 15‑Second High‑Fidelity Clips

Extends clip length to about 15 seconds. That is enough for a full narrative beat (setup → action → reaction) in a single generation, which fits 6–15s ad slots and social hooks well.

- High-Fidelity Audio & Stable Multi-Speaker Dialogue

A major leap in native audio generation. Wan 2.6 delivers highly realistic vocal timbres and stable multi-person dialogue. It produces synchronized, natural-sounding conversations between characters without the robotic tone common in AI audio.

- Advanced Video Reference (Ref‑Guided Acting)

You upload a rehearsal video (phone recording), and Wan 2.6 clones timing, blocking, and body language onto a generated character. This gives directors actor‑level control without reshoots.
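The example prompts in this guide spell out multi-shot structure inline ("Shot 1: Wide shot, ... Shot 2: Close-up, ..."). A small helper keeps that format consistent across briefs. This sketch only reproduces the prompt convention used in the examples. It is not an official Wan 2.6 prompt schema, and the helper name is hypothetical.

```python
from typing import List, Tuple


def build_multishot_prompt(
    premise: str, shots: List[Tuple[str, str]], suffix: str = ""
) -> str:
    """Join a premise and (framing, action) pairs into 'Shot N: framing, action.' form."""
    parts = [premise.strip()]
    for i, (framing, action) in enumerate(shots, start=1):
        # Normalise the trailing period so every shot ends with exactly one.
        parts.append(f"Shot {i}: {framing}, {action.rstrip('.')}.")
    if suffix:
        parts.append(suffix.strip())
    return " ".join(parts)


prompt = build_multishot_prompt(
    "A cinematic sci-fi trailer.",
    [
        ("Wide shot", "a lonely explorer in a battered spacesuit walking across a desolate red Martian desert"),
        ("Close-up", "the explorer stops and wipes dust off their helmet visor"),
    ],
    suffix="Highly detailed, consistent character.",
)
print(prompt)
```

For Wan 2.6, pair a prompt like this with `"shot_type": "multi"` in the request body, as the API example in this guide does.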

Overall, Wan 2.6 feels like a comprehensive narrative engine for directors, merging intelligent multi-shot visuals with high-fidelity dialogue to deliver complete, 15-second cinematic storylines.

Sora 2 in a Nutshell

Sora 2 is an advanced video generation model that significantly outperforms prior systems in physical accuracy, realism, and controllability, serving as a powerful engine for world simulation.

Key ideas:

- Unmatched Realism & Physical Simulation

Sora 2 features advanced world simulation capabilities, making generated scenes adhere more strictly to the laws of physics. It excels at delivering high-fidelity visuals across various aesthetics, ranging from hyper-realistic and cinematic footage to distinct anime styles.

- Superior Controllability & Consistency

The system offers unprecedented control, capable of following intricate instructions that span multiple shots. Crucially, it accurately maintains the "world state" (persistence), ensuring that objects, characters, and environments remain consistent throughout complex sequences.

- Fully Synchronized Audio Integration

Moving beyond silent imagery, Sora 2 introduces synchronized dialogue and sound effects. It creates sophisticated background soundscapes, speech, and SFX with a high degree of realism, perfectly matching the on-screen action for a fully immersive experience.

- Real-World Integration

The model bridges the virtual and the physical by letting users insert elements of the real world into generated content, such as a real person captured in a short video recording.

In conclusion, Sora 2 is a high-fidelity world simulator designed to generate physically consistent, multi-style videos with fully synchronized audio through highly controllable instructions.

Core Differences

Consistency Focus: Characters vs. Worlds

- Wan 2.6: Its strength lies in Character Consistency and Lip Synchronization. It excels at keeping a character's identity stable across frames and matching their mouth movements perfectly to speech.

- Sora 2: Its superpower is Environmental Consistency. It maintains a stable, persistent world state, ensuring that the background, physics, and spatial relationships remain coherent even as the camera moves.

Cinematography & Workflow

The workflow experience differs significantly depending on the use case.

- General scenes:

  - Wan 2.6 (Creating): Works beautifully with simple natural language. You describe the vibe, and it "creates" the scene for you, relying on generative intuition.

  - Sora 2 (Producing): Requires more granular control. You act like a director, supplying specific camera and shot instructions (e.g., pans, zooms), so it feels more like a technical "production" process.

- Commercial scenes:

  - Sora 2: In commercial contexts, however, Sora 2 shows strong conceptual inference. It can generate sophisticated storyboards and shots for ads without micromanagement.

Audio Dynamics

- Wan 2.6: Focuses on the narrative. It autonomously designs character dialogue based on the generated persona.

- Sora 2: Focuses on immersion. It generates hyper-realistic environmental audio and background soundscapes based on the physical setting.

Conclusion: Create vs. Produce

Ultimately, the choice comes down to two distinct philosophies:

- Wan 2.6 is for "Creating" Characters: It feels like an intuitive creative partner that prioritizes the actors and their performance.

- Sora 2 is for "Producing" Worlds: It acts as a high-fidelity simulator that prioritizes the physical environment and precise cinematic control.

Use Cases: When (and for Whom) to Choose Wan 2.6 or Sora 2

(Same prompt, different outputs)

A useful way to decide is to imagine running the same creative brief through both models and comparing the outputs.

Example 1: Cinematic Sci-Fi Scene

```plaintext
Prompt:
A cinematic sci-fi trailer. Shot 1: Wide shot, a lonely explorer in a battered spacesuit walking across a desolate red Martian desert, a massive derelict spaceship in the distance. Shot 2: Close-up, the explorer stops and wipes dust off their helmet visor, eyes widening in shock. Shot 3: Over-the-shoulder shot, revealing a glowing, bioluminescent blue flower blooming rapidly in front of them. 8k resolution, highly detailed, consistent character.
```

Output:

- Wan 2.6 output:

  - Consistent actress across angles

  - Good instruction following

  - Immersive background soundscapes

- Sora 2 output:

  - Good instruction following

  - Immersive background soundscapes and dialogue

Example 2: 15‑Second Product Ad

```plaintext
Prompt: A YouTuber promoting this AI companion toy in English. 1280*720
```

Output:

- Wan 2.6 output

- Sora 2 output:

  - Excellent semantic extrapolation in a commercial context

  - Maintains excellent production consistency

Example 3: Anime Style

This example shows Wan 2.6's strengths in dialogue and Auto-Scene Detection, and Sora 2's strength in immersive background soundscapes.

```plaintext
Prompt:
High-quality anime style. A girl wearing a colorful floral Yukata standing on traditional shrine steps at night. She turns back to look at the camera with a gentle smile. Massive, vibrant fireworks explode in the dark sky behind her, illuminating her silhouette. Soft glow from hanging paper lanterns. Fireflies, magical atmosphere.
```

Output:

- Wan 2.6 output:

  - Superior AI storyboarding capabilities

  - Smooth narrative and natural dialogue

- Sora 2 output:

  - Immersive background soundscapes

Who should pick which?

- Influencers, casual creators, and anyone who wants flexible video sizes for fast-moving viral content → Wan 2.6

- Professional creators, brands, and e‑commerce teams that need polish and control → Sora 2

How to Use Both Models on Atlas Cloud

Instead of committing to one side of “Wan 2.6 vs Sora 2,” you can use both models side by side on Atlas Cloud: first in a playground, then via a single API.

Method 1: Use the models directly on the Atlas Cloud platform

| Wan 2.6 family | Sora 2 family |
|---|---|
| Wan 2.6 text-to-video | Sora 2 text-to-video |
| Wan 2.6 image-to-video | Sora 2 image-to-video |
| Wan 2.6 Ref-video | |

Method 2: Access via API

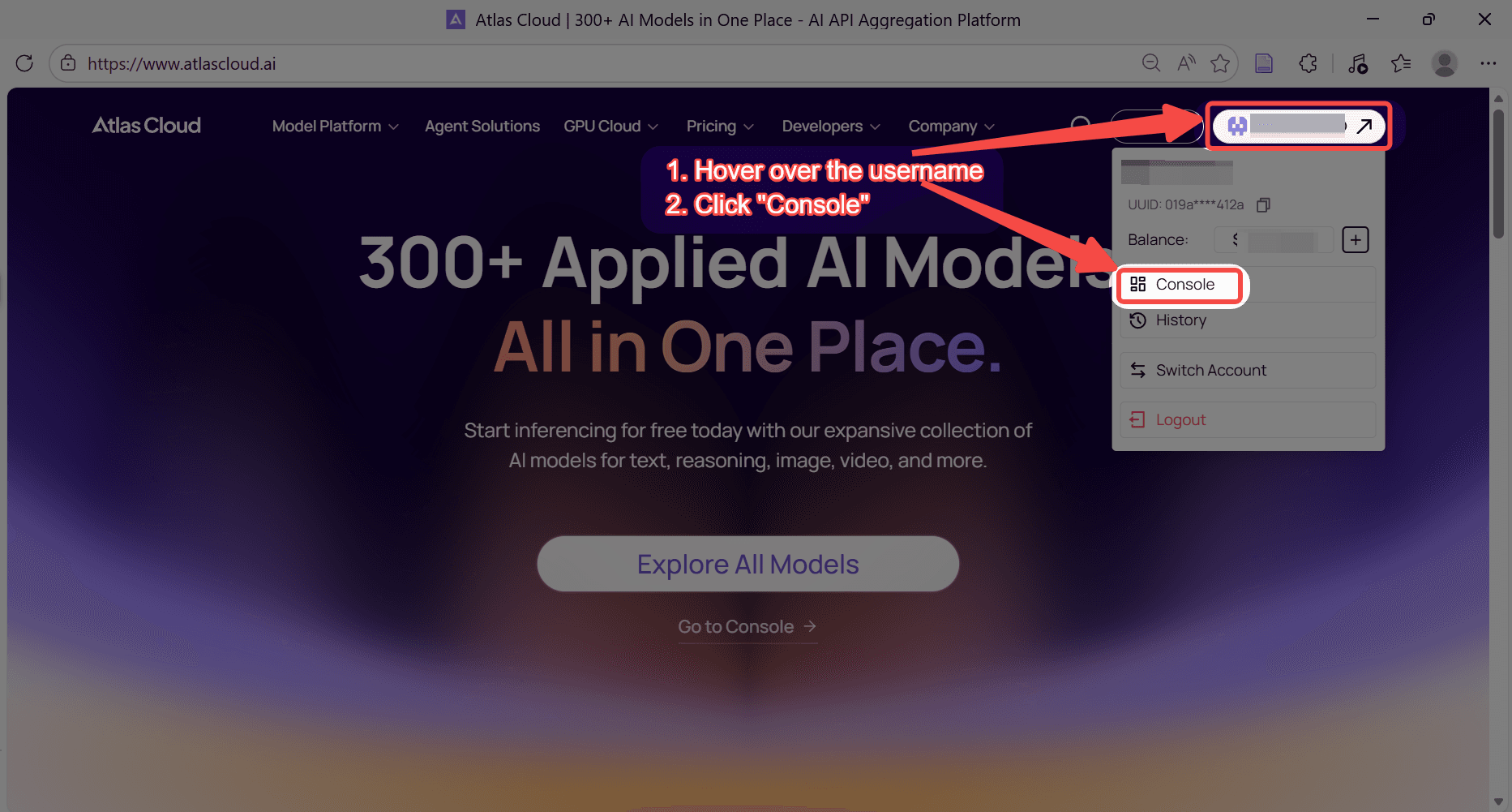

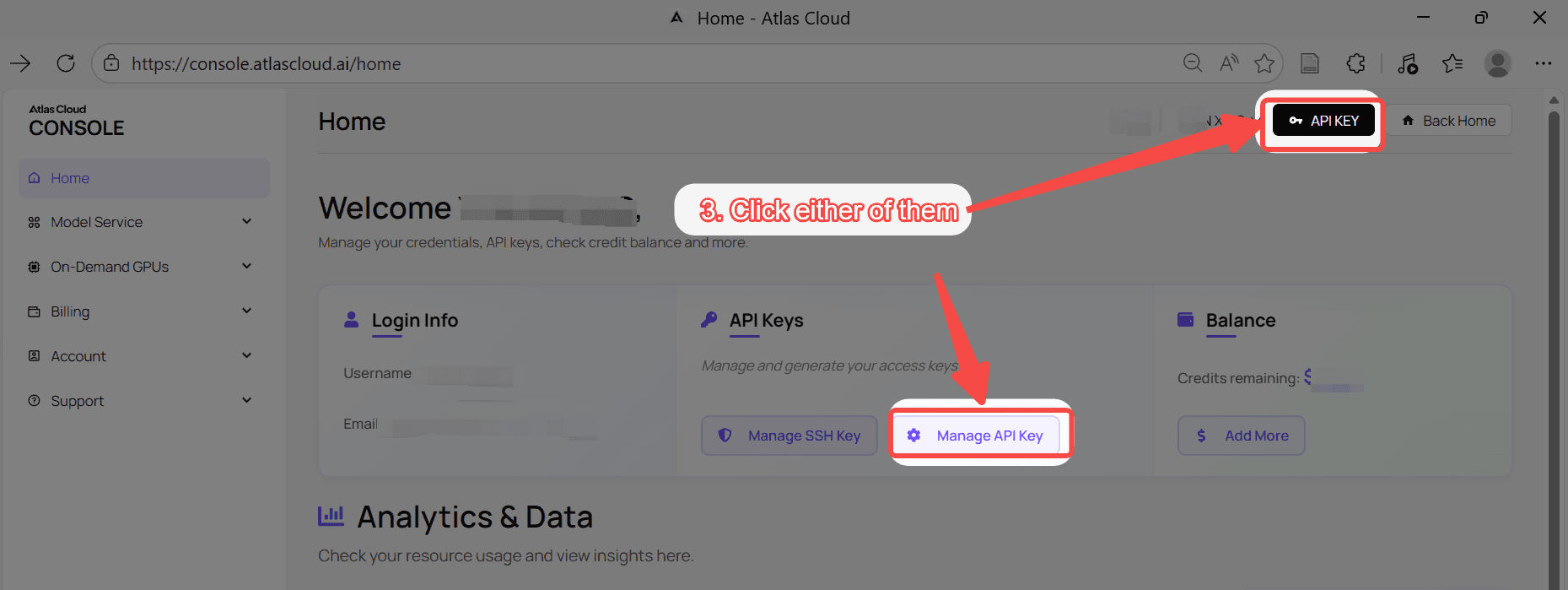

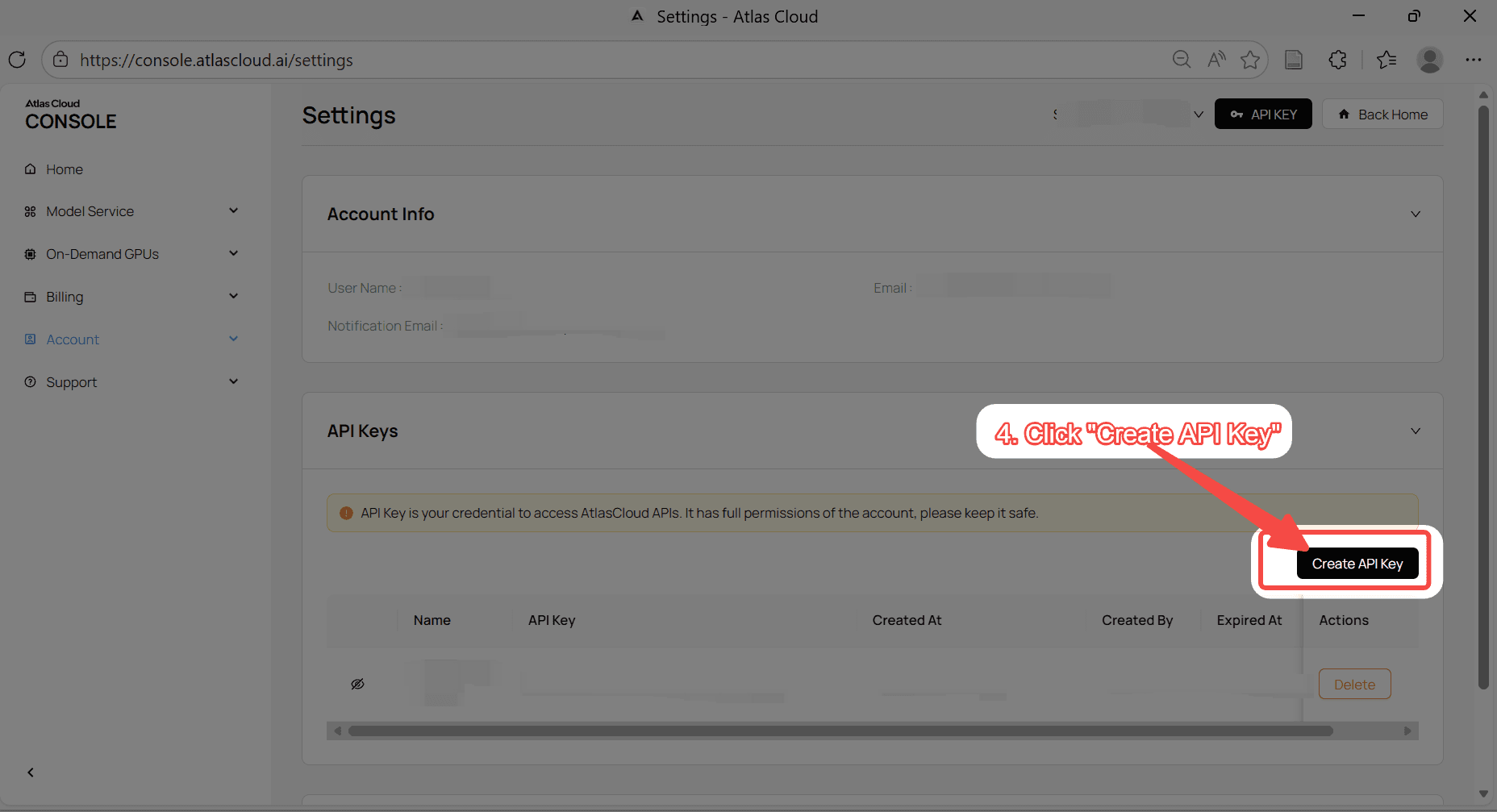

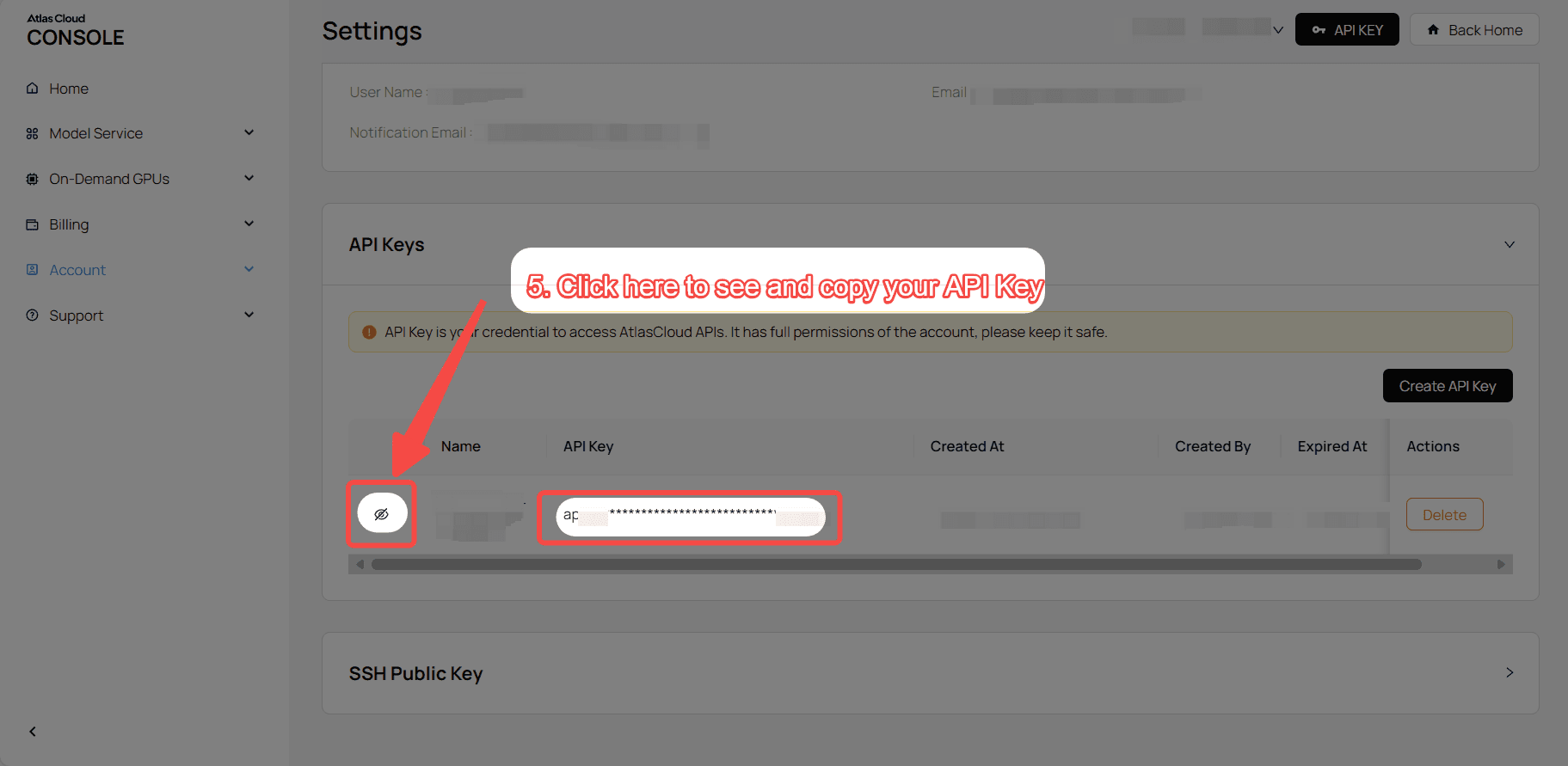

Step 1: Get your API key

Create an API key in your console and copy it for later use.
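A common way to keep the key out of source code is to export it as an environment variable and build the request headers from it. This sketch assumes the variable is named `ATLASCLOUD_API_KEY`, the name used in the Python example in Step 3. The Bearer header format also comes from that example. The helper name `auth_headers` is hypothetical.

```python
import os


def auth_headers() -> dict:
    """Build Atlas Cloud request headers from the ATLASCLOUD_API_KEY env var."""
    key = os.environ.get("ATLASCLOUD_API_KEY")
    if not key:
        raise RuntimeError("Set ATLASCLOUD_API_KEY first, e.g. `export ATLASCLOUD_API_KEY=...`")
    return {"Content-Type": "application/json", "Authorization": f"Bearer {key}"}
```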

Step 2: Check the API documentation

Review the endpoint, request parameters, and authentication method in our API docs.

Step 3: Make your first request (Python example)

Example: generate a video with Wan 2.6 (text-to-video).

```python
import os
import time

import requests

# Read the key from the environment. A literal "Bearer $ATLASCLOUD_API_KEY"
# string would be sent as-is: Python does not expand shell variables.
API_KEY = os.environ["ATLASCLOUD_API_KEY"]
AUTH = {"Authorization": f"Bearer {API_KEY}"}

# Step 1: Start video generation
generate_url = "https://api.atlascloud.ai/api/v1/model/generateVideo"
headers = {"Content-Type": "application/json", **AUTH}
data = {
    "model": "alibaba/wan-2.6/text-to-video",
    "audio": None,
    "duration": 15,  # Wan 2.6 supports 5, 10 or 15 seconds
    "enable_prompt_expansion": True,
    "negative_prompt": "example_value",  # placeholder: describe what to avoid
    "prompt": "A cinematic sci-fi trailer. Shot 1: Wide shot, a lonely explorer in a battered spacesuit walking across a desolate red Martian desert, a massive derelict spaceship in the distance. Shot 2: Close-up, the explorer stops and wipes dust off their helmet visor, eyes widening in shock. Shot 3: Over-the-shoulder shot, revealing a glowing, bioluminescent blue flower blooming rapidly in front of them. 8k resolution, highly detailed, consistent character.",
    "seed": -1,
    "size": "1920*1080",
    "shot_type": "multi",  # request a multi-shot narrative
}

generate_response = requests.post(generate_url, headers=headers, json=data, timeout=30)
generate_response.raise_for_status()  # surface HTTP errors early
prediction_id = generate_response.json()["data"]["id"]

# Step 2: Poll for the result
poll_url = f"https://api.atlascloud.ai/api/v1/model/prediction/{prediction_id}"


def check_status():
    while True:
        response = requests.get(poll_url, headers=AUTH, timeout=30)
        response.raise_for_status()
        result = response.json()["data"]

        if result["status"] in ("completed", "succeeded"):
            print("Generated video:", result["outputs"][0])
            return result["outputs"][0]
        if result["status"] == "failed":
            raise RuntimeError(result.get("error") or "Generation failed")
        # Still processing: wait 2 seconds before polling again
        time.sleep(2)


video_url = check_status()
```
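The polling loop above waits indefinitely if a job stalls. A variant with an overall deadline is sketched below. It reuses the status values from the example (`completed`, `succeeded`, `failed`). It takes the HTTP call as a `fetch` function, so the loop can be exercised without the network. The function name and the 600-second default timeout are illustrative choices, not part of the Atlas Cloud API.

```python
import time
from typing import Callable


def wait_for_prediction(
    fetch: Callable[[], dict],
    interval_s: float = 2.0,
    timeout_s: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll `fetch()` (returns the response's "data" object) until the job ends.

    Returns the first output URL, raises RuntimeError if the job failed and
    TimeoutError if it is still running when the deadline passes.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        data = fetch()
        status = data["status"]
        if status in ("completed", "succeeded"):
            return data["outputs"][0]
        if status == "failed":
            raise RuntimeError(data.get("error") or "Generation failed")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"prediction still '{status}' after {timeout_s} s")
        sleep(interval_s)
```

With the example above, `fetch` could be `lambda: requests.get(poll_url, headers=AUTH, timeout=30).json()["data"]`.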

FAQ

Q: How does Atlas Cloud help me choose between Wan 2.6 and Sora 2? A: Atlas Cloud allows you to run the exact same prompt through both models simultaneously. You can view the output quality and cost side-by-side to determine which model offers the best Return on Investment (ROI) for your specific workflow.

Q: What is the fundamental difference between the two models? A: The core philosophy differs: Wan 2.6 is for "Creating", acting as an intuitive creative partner focused on characters and narrative performance. Sora 2 is for "Producing", acting as a high-fidelity simulator focused on physical accuracy, environmental consistency, and precise cinematic control.

Q: Which model handles audio better? A: Both support audio, but their focus differs:

- Wan 2.6: Focuses on Narrative. It is excellent for natural-sounding, synchronized dialogue between multiple characters without a robotic tone.

- Sora 2: Focuses on Immersion. It generates hyper-realistic background soundscapes and sound effects (SFX) that perfectly match the on-screen physical action.