Seedance 2.0 Overview

Atlas Cloud is about to welcome a powerful new addition: Seedance 2.0.

- What is Seedance 2.0? Developed by ByteDance, Seedance 2.0 is the latest multimodal video generation model and a robust new member of the Seedance family of video models. It integrates four input modalities (image, video, audio, and text), enabling richer expression and far more controllable results.

- Core Benefit: It tackles the "uncontrollability" pain point in video generation, offering precise composition restoration, complex action replication, and high character consistency.

- Status: Coming soon to Atlas Cloud (expected release: late this month).

How does it achieve this unprecedented control? Let’s reveal the four core pillars powering Seedance 2.0.

Core Features: Redefining the Video Creation Workflow

Multimodal Reference Capabilities: From "Prompt Guessing" to "Precise Replication"

The highlight of Seedance 2.0 is its "Reference Capability." It accepts up to 12 reference files in any mix of images, videos, and audio, and accurately understands the role each one plays: an image can define the visual style, a video can specify character action and camera movement, and audio can drive the rhythm.

For creators, this means no more struggling with complex text prompts. Simply upload reference materials, and the model accurately restores composition, character details, and camera language, significantly lowering the barrier to entry while boosting precision.
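The Seedance 2.0 request format has not been published yet, so the snippet below is only a hypothetical sketch of how a client might bundle a mixed reference set before submitting it. The `Reference` class, the `build_reference_payload` function, the role labels, and the payload field names are all assumptions for illustration; only the 12-file limit and the three reference media types come from the description above.

```python
from dataclasses import dataclass

# Hypothetical limits and payload layout: the Seedance 2.0 API schema is not public yet.
MAX_REFERENCES = 12  # limit stated for Seedance 2.0
ALLOWED_KINDS = {"image", "video", "audio"}


@dataclass
class Reference:
    kind: str  # "image", "video", or "audio"
    url: str   # where the reference file is hosted
    role: str  # hypothetical label for what it controls, e.g. "style", "motion", "rhythm"


def build_reference_payload(prompt: str, references: list[Reference]) -> dict:
    """Validate a mixed reference set and bundle it with the text prompt."""
    if len(references) > MAX_REFERENCES:
        raise ValueError(
            f"At most {MAX_REFERENCES} reference files are supported, got {len(references)}"
        )
    for ref in references:
        if ref.kind not in ALLOWED_KINDS:
            raise ValueError(f"Unsupported reference kind: {ref.kind!r}")
    return {
        "prompt": prompt,
        "references": [{"type": r.kind, "url": r.url, "role": r.role} for r in references],
    }


payload = build_reference_payload(
    "A dancer spins through a neon-lit alley.",
    [
        Reference("image", "https://example.com/style.png", "style"),
        Reference("video", "https://example.com/moves.mp4", "motion"),
        Reference("audio", "https://example.com/beat.mp3", "rhythm"),
    ],
)
print(len(payload["references"]))  # → 3
```

Validating the count and media types on the client side surfaces mistakes before any generation credits are spent; the authoritative limits will be whatever the official API documentation specifies once Seedance 2.0 launches.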

Extreme Consistency & Stability: Solving the "Character Morphing" Problem

In video generation, inconsistent facial features and flickering scenes are common headaches. Seedance 2.0 significantly improves its understanding of physical laws and instructions, so facial features, clothing details, and overall visual style all stay consistent throughout the clip.

This is crucial for long-form content and brand storytelling: it preserves character IP continuity and finally makes AI video viable for serious narratives and commercial advertisements.

Video Editing & Infinite Extension: Don't Just Generate, "Keep Shooting"

Seedance 2.0 offers powerful editing capabilities, including character replacement and adding or removing content within existing videos. It also supports smooth, prompt-driven video extension and concatenation.

This optimizes post-production workflows. Designers can "reshoot" or "tweak scenes" directly within the model without regenerating the entire clip, saving significant rendering time and computing costs.

Audio-Visual Sync & Beat Matching: The Rhythm Master

The model natively supports audio input and generates visuals that follow its rhythm. Whether the task is landing complex camera movements on the beat of the background music (BGM) or syncing a character's lip movements and actions with reference audio, Seedance 2.0 performs with precision.

This makes Music Video (MV) production and rhythmic ad editing incredibly efficient, achieving an automated, high-level fusion of sight and sound.
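Seedance 2.0 handles the beat matching itself, but when planning a music-driven edit it can help to know in advance where the beats fall. At a constant tempo, beats are spaced 60 / BPM seconds apart. The helper below is a planning aid that assumes a steady tempo; `beat_times` is a hypothetical name and is not part of any Seedance or Atlas Cloud API.

```python
def beat_times(bpm: float, duration_s: float) -> list[float]:
    """Return the timestamp (in seconds) of every beat in a clip, assuming a constant tempo."""
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    interval = 60.0 / bpm  # seconds between consecutive beats
    times = []
    k = 0
    while k * interval < duration_s:
        times.append(round(k * interval, 3))
        k += 1
    return times


print(beat_times(120, 5))
# → [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5]
```

At 120 BPM, a 5-second clip contains ten beats, one every half second, which gives a rough grid for where cuts or camera moves should land.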

Use Cases: Commercial Applications from E-Commerce to Filmmaking

These powerful technical features are revolutionizing content production workflows in real-world commercial and creative scenarios.

E-Commerce & Marketing: Ultimate Texture Reproduction & Product Detail

- Context: Showcasing multi-colored products with clear branding and unified texture.

- Output: The video perfectly displays the silky texture of the ribbons and how the products look when worn by a model. The brand logo remains clear, and scene transitions sync perfectly with the background audio, delivering high-end commercial quality.


Narrative Short Films: Precise Control of Emotional Tension & Pacing

- Context: Creating a thrilling action scene requiring precise control over character emotion, interaction, and narrative rhythm.

- Output: The model accurately controls the shifts between slow and fast motion while maintaining character consistency, vividly conveying the tense atmosphere.


Creative VFX: Seamless Surreal Fusion of Real Footage & CG

- Context: Creating a popular social media "transformation" effect.

- Output: Achieves a seamless transition from live action to CG characters. The wing-flapping physics look natural, and the camera moves fluidly between micro and macro views, delivering strong visual impact.


Complex Action & Camera Movement: Replicating "One-Take" Cinematic Styles

- Context: Mimicking the ultra-long opening tracking shot style of the series Severance.

- Output: The model perfectly replicates the eerie, fluid "one-take" style of the reference. The camera weaves through different spaces and perspectives while maintaining facial stability. The trajectory is smooth with no tearing or logical breaks, demonstrating immense control over long takes and surreal expression.


Atlas Cloud: The Best Way to Master Seedance 2.0

Atlas Cloud Core Advantages

Cost Efficiency & Extreme Speed

- Competitive Pricing: We provide the most cost-effective generation experience through optimized price-performance ratios.

- One Platform, All Models: Try different mainstream models without hopping between platforms. Register now to get $1 in free credit!

Workflow Integration & API Ecosystem

Designed to fit seamlessly into technical development pipelines and support downstream tasks.

- Flexible Workflows: Run Seedance in parallel with other generative models in the same environment.

- Actionable Deliverables: Generated results are ready for immediate post-processing or secondary editing.

- Full API Access: Provides standard developer interfaces for business process automation and deep application integration.

How to Use Seedance 2.0 on Atlas Cloud

Atlas Cloud lets you use models side by side: first in the playground, then through a single API.

Method 1: Use directly in the Atlas Cloud playground

Method 2: Access via API

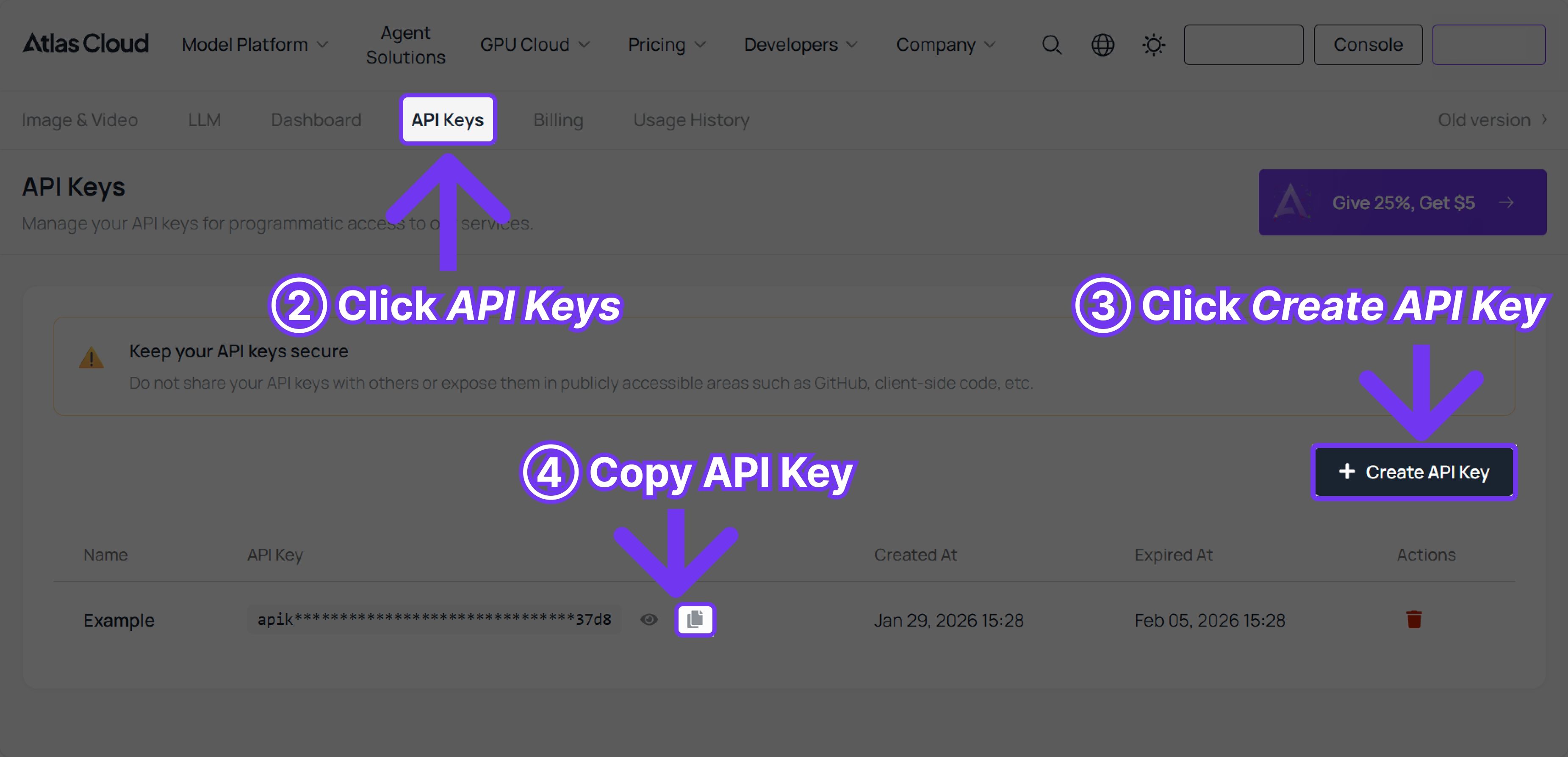

Step 1: Get your API key

Create an API key in your console and copy it for later use.
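A common way to keep the key out of your source code is to store it in an environment variable and read it at runtime. The sketch below assumes the variable is named `ATLASCLOUD_API_KEY` (the name the example request uses) and sends it as a Bearer token, matching the example's `Authorization` header; the `auth_headers` helper itself is hypothetical.

```python
import os


def auth_headers() -> dict:
    """Build JSON request headers from the key stored in ATLASCLOUD_API_KEY."""
    api_key = os.environ.get("ATLASCLOUD_API_KEY")
    if not api_key:
        # Fail early with a clear message instead of sending an unauthenticated request
        raise RuntimeError("Set the ATLASCLOUD_API_KEY environment variable first")
    return {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
```

Set the variable in your shell before running your script, then pass `auth_headers()` as the `headers` argument of your `requests` calls.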

Step 2: Check the API documentation

Review the endpoint, request parameters, and authentication method in our API docs.

Step 3: Make your first request (Python example)

Example: generate a video with Seedance 1.5 Pro (image-to-video). Seedance 2.0 is not available yet, so this example uses an existing Seedance model.

```python
import os
import time

import requests

# Read the API key from the environment; Python does not expand "$ATLASCLOUD_API_KEY" inside a string
API_KEY = os.environ["ATLASCLOUD_API_KEY"]

# Step 1: Start video generation
generate_url = "https://api.atlascloud.ai/api/v1/model/generateVideo"
headers = {
    "Content-Type": "application/json",
    "Authorization": f"Bearer {API_KEY}",
}
data = {
    "model": "bytedance/seedance-v1.5-pro/image-to-video",
    "aspect_ratio": "16:9",
    "camera_fixed": False,
    "duration": 5,
    "generate_audio": True,
    "image": "https://static.atlascloud.ai/media/images/06a309ac0adecd3eaa6eee04213e9c69.png",
    # Optional: "last_image" takes a real image URL (see the API docs); omitted here
    "prompt": (
        "Use the provided image as the first frame.\n"
        "On a quiet residential street in a summer afternoon, a young girl in high-quality Japanese anime style slowly walks forward.\n"
        "Her steps are natural and light, with her arms gently swinging in rhythm with her walk. Her body movement remains stable and well-balanced.\n"
        "As she walks, her expression gradually softens into a gentle, warm smile. The corners of her mouth lift slightly, and her eyes look calm and bright.\n"
        "A soft breeze moves her short hair and headband, with individual strands subtly flowing. Her clothes show slight natural motion from the wind.\n"
        "Sunlight comes from the upper side, creating soft highlights and natural shadows on her face and body.\n"
        "Background trees sway gently, and distant clouds drift slowly, enhancing the peaceful summer atmosphere.\n"
        "The camera stays at a medium to medium-close distance, smoothly tracking forward with cinematic motion, stable and controlled.\n"
        "High-quality Japanese hand-drawn animation style, clean linework, warm natural colors, smooth frame rate, consistent character proportions.\n"
        "The mood is calm, youthful, and healing, like a slice-of-life moment from an animated film."
    ),
    "resolution": "720p",
    "seed": -1,
}

generate_response = requests.post(generate_url, headers=headers, json=data, timeout=30)
generate_response.raise_for_status()  # surface HTTP errors before parsing the body
generate_result = generate_response.json()
prediction_id = generate_result["data"]["id"]

# Step 2: Poll for the result
poll_url = f"https://api.atlascloud.ai/api/v1/model/prediction/{prediction_id}"


def check_status():
    """Poll the prediction endpoint until the video is ready or generation fails."""
    while True:
        response = requests.get(poll_url, headers={"Authorization": f"Bearer {API_KEY}"}, timeout=30)
        response.raise_for_status()
        result = response.json()
        status = result["data"]["status"]

        if status in ["completed", "succeeded"]:
            print("Generated video:", result["data"]["outputs"][0])
            return result["data"]["outputs"][0]
        elif status == "failed":
            raise RuntimeError(result["data"].get("error") or "Generation failed")
        else:
            # Still processing; wait 2 seconds before polling again
            time.sleep(2)


video_url = check_status()
```
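The polling loop in the example waits indefinitely with a fixed 2-second delay. A slightly more defensive variant, sketched below, adds an overall timeout and exponential backoff. It reuses the status values (`"completed"`, `"succeeded"`, `"failed"`) and response fields from the example; the function name `wait_for_prediction` and the timeout and backoff defaults are illustrative assumptions, not documented Atlas Cloud behavior.

```python
import time
from typing import Callable


def wait_for_prediction(
    fetch_status: Callable[[], dict],
    timeout_s: float = 600.0,
    initial_delay_s: float = 2.0,
    max_delay_s: float = 30.0,
) -> str:
    """Poll until the prediction finishes, backing off between attempts.

    fetch_status must return the parsed JSON body of the prediction endpoint,
    shaped like {"data": {"status": ..., "outputs": [...], "error": ...}}.
    """
    deadline = time.monotonic() + timeout_s
    delay = initial_delay_s
    while True:
        data = fetch_status()["data"]
        status = data["status"]
        if status in ("completed", "succeeded"):
            return data["outputs"][0]
        if status == "failed":
            raise RuntimeError(data.get("error") or "Generation failed")
        # Give up if the next wait would overshoot the overall deadline
        if time.monotonic() + delay > deadline:
            raise TimeoutError(f"Prediction still {status!r} after {timeout_s} seconds")
        time.sleep(delay)
        delay = min(delay * 2, max_delay_s)  # exponential backoff, capped at max_delay_s
```

To use it with the request above, pass a function that fetches the prediction, for example `lambda: requests.get(poll_url, headers=headers, timeout=30).json()`. Taking the fetch step as a parameter keeps the polling logic independent of the HTTP client, which also makes it easy to test with canned responses.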