Key Takeaways

- Compute now tops payroll for many AI startups, with hosting and GPU spend often eating half of revenue, far above what classic SaaS companies spend.
- Idle capacity is the silent drain. Average GPU utilization still hovers near 40%, forcing teams to overbuy or risk latency blow-ups when traffic surges.
- GPU-first “neoclouds” are reshaping the market. Independent providers like CoreWeave help absorb spiky inference while hyperscalers handle steady workloads, flattening the overall cost curve.
- Operational craft wins. Fine-grained telemetry, distributed inference tuning, and smart capacity deals can push utilization toward 70% and return margin straight to the P&L.
- Compute economics is a customer-visible feature. Treating infrastructure as a living metric, not a sunk cost, reduces dilution, pleases users, and sharpens investor conversations.

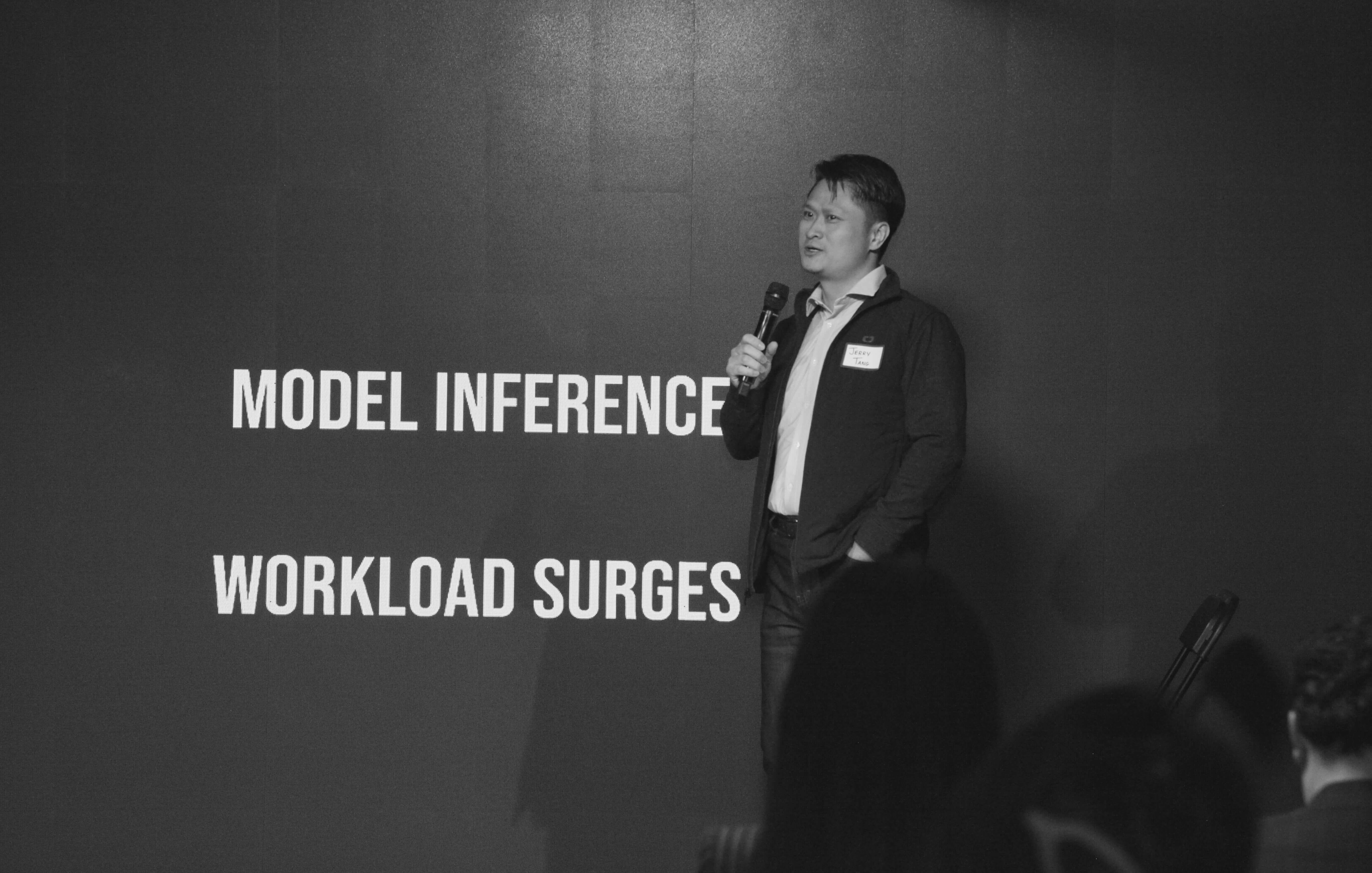

Jerry Tang, CEO of Atlas Cloud, 2025

When Atlas Cloud CEO Jerry Tang took the stage at a recent AI Summit, he went straight to the point: compute costs now outrank payroll as the biggest threat to a young AI company’s runway.

Founders once sweated payroll first, but for many AI companies the heaviest line item now belongs to silicon.

A study of 125 venture-backed startups shows compute and hosting swallowing half of revenue, more than double the burden at classic SaaS peers (Kruze Consulting). The pain is not easing.

A recent CEO survey projects an 89% jump in corporate compute spend between 2023 and 2025, driven mainly by generative-AI roll-outs.

Why Utilization Fails: The Spike Problem

Why does the bill feel so lopsided? Utilization rarely matches intent. GPUs sit idle until traffic suddenly spikes, and then everything looks under-provisioned. That swing forces founders to choose between overbuying capacity and watching latencies balloon.
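To make the spike problem concrete, here is a minimal Python sketch built on a made-up 24-hour demand trace (the numbers are illustrative, not from the article). Utilization is taken as the share of provisioned GPU-hours that do useful work; demand above capacity is counted as unserved, which in practice shows up as queueing and latency. The function names are hypothetical.

```python
# Hypothetical hourly demand, in GPUs' worth of work (illustrative only).
HOURLY_DEMAND = [12, 10, 9, 9, 10, 14, 22, 35, 48, 55, 60, 58,
                 54, 50, 52, 57, 62, 70, 64, 45, 32, 24, 18, 14]


def utilization(capacity: int, demand: list[int]) -> float:
    """Share of provisioned GPU-hours spent on useful work.

    Demand above capacity cannot be served on this fleet (it queues instead),
    so it does not count toward utilization.
    """
    served = sum(min(d, capacity) for d in demand)
    return served / (capacity * len(demand))


def overloaded_hours(capacity: int, demand: list[int]) -> int:
    """Hours in which demand exceeds capacity, i.e. latency is at risk."""
    return sum(1 for d in demand if d > capacity)


if __name__ == "__main__":
    # Compare sizing for the peak against sizing near average demand.
    for capacity in (max(HOURLY_DEMAND), 40):
        print(f"{capacity} GPUs: utilization {utilization(capacity, HOURLY_DEMAND):.0%}, "
              f"overloaded hours {overloaded_hours(capacity, HOURLY_DEMAND)}")
```

With this toy trace, sizing the fleet for the 70-GPU peak leaves it roughly 53% utilized, while sizing it at 40 GPUs lifts utilization to about 72% but leaves 12 hours in which demand exceeds capacity. That is the overbuy-or-slow-down trade-off in miniature.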

Nvidia’s Jensen Huang added perspective at GTC this spring, arguing that AI workloads will require roughly three times more data-center capex by 2028 than today if we expect models to stay responsive. In other words, the demand curve is still steeper than most cost curves.

A New Supply Base: GPU-First Neoclouds

When hyperscalers like Azure and AWS were the sole option, founders dealt with rising demand by chasing bigger rounds and bulk discounts. Today, GPU-focused neoclouds offer a smarter path, giving teams flexible capacity without the capital strain.

Two GPU-first clouds lead the neocloud pack: CoreWeave, the first non-hyperscaler to run a reliable 10,000-GPU deployment, and Atlas Cloud, which matches that scale while tuning its clusters for bursty workloads and tighter cost control.

CoreWeave’s own numbers hint at the opportunity: its revenue leapt 420% year on year in its first quarter as a public company, even as its share price bounced around.

Complement, Don’t Replace: Multicloud in Practice

These neocloud specialists do not need to replace AWS or Azure to matter. A recent TechInsights brief notes that GPU clouds increasingly play a complementary role in multicloud strategies, absorbing bursty inference while hyperscalers keep the steady workloads.

The effect is a blended cost curve that softens the peaks without forcing a wholesale migration.
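A small cost model shows why a blended setup softens the peaks. The sketch below is a simplified assumption, not a pricing model from TechInsights or any provider: committed capacity is billed every hour at a lower rate, burst capacity is billed only when used at a higher rate, and all prices, demand figures and function names are hypothetical.

```python
# Hypothetical demand over an 8-hour window (GPUs needed each hour).
DEMAND = [20, 20, 22, 25, 60, 90, 40, 22]
COMMITTED_PRICE = 2.0  # assumed $/GPU-hour, billed every hour whether used or not
BURST_PRICE = 3.0      # assumed $/GPU-hour, billed only for hours actually used


def cost_peak_committed(demand: list[int]) -> float:
    """Size committed capacity for the peak and pay for it every hour."""
    return max(demand) * len(demand) * COMMITTED_PRICE


def cost_blended(demand: list[int], base: int) -> float:
    """Committed base every hour, plus burst capacity only above the base."""
    committed = base * len(demand) * COMMITTED_PRICE
    burst_gpu_hours = sum(max(d - base, 0) for d in demand)
    return committed + burst_gpu_hours * BURST_PRICE


if __name__ == "__main__":
    print(f"peak on committed capacity: ${cost_peak_committed(DEMAND):,.0f}")
    print(f"25-GPU base + burst:        ${cost_blended(DEMAND, 25):,.0f}")
```

Under these assumed numbers, covering the 90-GPU peak with committed capacity costs $1,440 for the window, while a 25-GPU committed base plus burst capacity costs $745, roughly half, because the expensive hours are paid for only when they actually occur.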

Operational Craft Beats Raw Capacity

Execution still decides who wins. Teams that watch second-by-second telemetry, tune batch sizes, and lean on NCCL-backed distributed inference often push utilization from the typical 40% band toward 70% and beyond.

Others negotiate “capacity blocks” at neoclouds for predictable base load and let everything spiky float on cheaper spot inventory.
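How large should a capacity block be? One simple way to reason about it, sketched below under stated assumptions, is to sweep candidate block sizes and keep the cheapest total. Here `block_price` is the committed hourly rate and `spot_price` is an assumed *effective* rate per useful GPU-hour after preemptions and retries, which can exceed the block rate even when the spot list price is lower. The rates, the demand trace and the function names are all hypothetical.

```python
# Hypothetical 8-hour demand trace (GPUs needed each hour).
DEMAND = [20, 20, 22, 25, 60, 90, 40, 22]


def total_cost(demand: list[int], block: int,
               block_price: float, spot_price: float) -> float:
    """Block capacity is paid every hour; overflow is paid per GPU-hour on spot."""
    committed = block * len(demand) * block_price
    overflow = sum(max(d - block, 0) for d in demand)
    return committed + overflow * spot_price


def best_block_size(demand: list[int], block_price: float,
                    spot_price: float) -> tuple[int, float]:
    """Try every block size from 0 to the peak and keep the cheapest."""
    best_size, best_cost = 0, total_cost(demand, 0, block_price, spot_price)
    for size in range(1, max(demand) + 1):
        cost = total_cost(demand, size, block_price, spot_price)
        if cost < best_cost:  # strict '<' keeps the smallest size on ties
            best_size, best_cost = size, cost
    return best_size, best_cost


if __name__ == "__main__":
    # Assumed rates: $2.00 block, $3.00 effective spot (after preemption overhead).
    size, cost = best_block_size(DEMAND, block_price=2.0, spot_price=3.0)
    print(f"block of {size} GPUs, total ${cost:,.0f}")
```

In this linear model, adding one more GPU to the block pays off only while demand exceeds that level in more than `block_price / spot_price` of the hours (two-thirds here), which is why the sweep settles on a 22-GPU block for this trace. A brute-force sweep is used for clarity; real capacity planning would also weigh commitment length and preemption risk.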

None of this is glamorous, yet every percentage point of efficiency returns margin straight to the P&L.
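The claim that utilization gains flow back to margin is simple arithmetic: the effective cost of a useful GPU-hour is the hourly price divided by utilization. The sketch below assumes a hypothetical $2.00/GPU-hour rate, 100,000 useful GPU-hours and $1M of revenue, and ignores every non-compute cost, so its "compute margin" is revenue minus compute spend only. Function names are illustrative.

```python
def effective_cost_per_useful_hour(price_per_gpu_hour: float,
                                   utilization: float) -> float:
    """Cost of one GPU-hour of useful work when only `utilization` of paid hours are busy."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return price_per_gpu_hour / utilization


def compute_margin(revenue: float, useful_gpu_hours: float,
                   price_per_gpu_hour: float, utilization: float) -> float:
    """Share of revenue left after compute spend (all other costs ignored)."""
    compute_spend = useful_gpu_hours * effective_cost_per_useful_hour(
        price_per_gpu_hour, utilization)
    return (revenue - compute_spend) / revenue


# Hypothetical inputs for illustration only.
REVENUE = 1_000_000.0
USEFUL_HOURS = 100_000.0
PRICE = 2.00

if __name__ == "__main__":
    for u in (0.40, 0.55, 0.70):
        print(f"utilization {u:.0%}: "
              f"${effective_cost_per_useful_hour(PRICE, u):.2f} per useful GPU-hour, "
              f"compute margin {compute_margin(REVENUE, USEFUL_HOURS, PRICE, u):.1%}")
```

With these made-up inputs, 40% utilization makes each useful hour cost $5.00 and compute consumes half of revenue, echoing the burden described earlier; at 70% the same work costs about $2.86 per useful hour and the compute margin rises to roughly 71%.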

Capital Discipline: Growth Without Capex Bloat

The market is also teaching a capital lesson. Investors rewarded CoreWeave’s growth but questioned its heavy capex, reminding founders that scale itself is not a moat.

What impresses now is the craft of matching compute to demand hour by hour, region by region. That skill keeps dilution down and optionality high when the next fundraise or strategic pivot arrives.

Compute Economics as a Product Feature

Seen in this light, compute economics is less a back-office concern than a product feature. Customers feel it in latency and CFOs feel it in burn. Treating infrastructure as a living business metric, not a sunk cost, changes conversations with both users and investors.