Seedream 5.0 Image Models

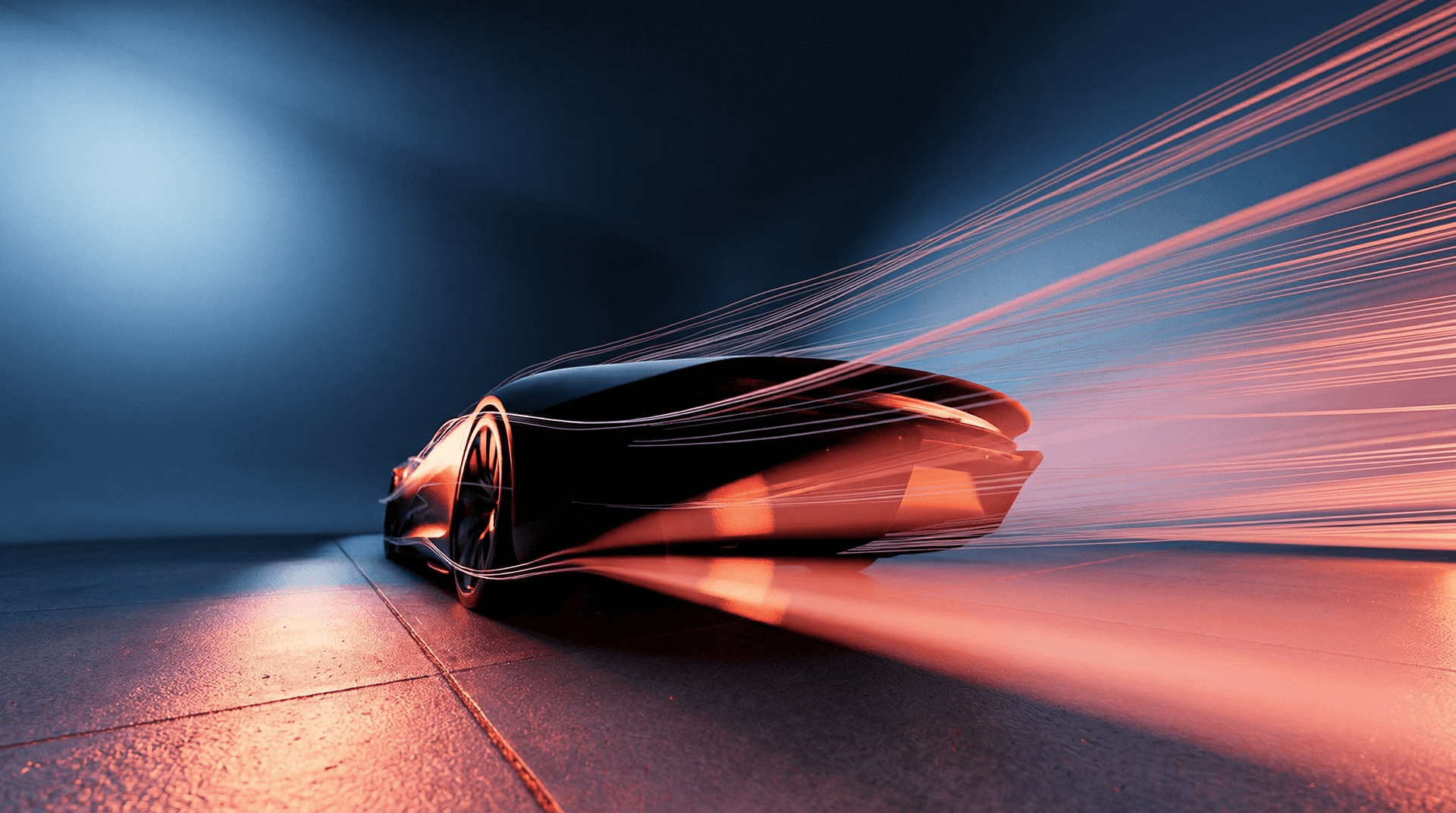

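Seedream 5.0 (by ByteDance) is a next-generation multimodal visual synthesis engine. By combining real-time web retrieval with logical reasoning, it accurately interprets physical laws and complex instructions. Built as an end-to-end visual productivity tool for professional designers and creators, it supports the entire workflow from initial inspiration to instant generation and precise editing.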

Explore Leading Models

Atlas Cloud provides the latest industry-leading creative models.

Seedream v4.5

ByteDance's latest image generation model, with all-round improvements. It excels at typography, poster design, and brand visual creation, with superior prompt adherence.

Seedream v4.5 Edit

ByteDance's advanced image editing model, which preserves facial features, lighting, and color tones while enabling professional-quality modifications.

Seedream v4.5 Sequential

ByteDance's latest image generation model with batch generation support. It can generate up to 15 images in a single request.

Seedream v4.5 Edit Sequential

ByteDance's advanced image editing model with batch generation support. It can edit multiple images while preserving facial features and details.

Key Highlights of Seedream 5.0 Image Models

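Real-Time Trend Capture

Web-aware generation brings current global trends and trending topics into the creative process, removing the knowledge-cutoff barrier.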

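Deep Logical Reasoning

Goes beyond conventional image generation with spatial thinking and multi-step reasoning, and handles complex compositions and real-world physics well.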

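Precise, Controllable Editing

Supports precise modifications and strict instruction following, enabling clean local inpainting while keeping the subject consistent.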

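Cinematic Ultra-HD Quality

Delivers fine detail with professional-grade lighting and texture, meeting the demands of high-fidelity commercial output.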

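Professional Text and Typography

Strong aesthetic judgment in text rendering and layout, capable of producing complex design assets such as text-dense posters and charts.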

What You Can Do with Seedream 5.0 Image Models

Quickly generate high-quality social media assets by drawing on trending memes and current events in real time.

Generate diagrams that express logical relationships, packaging designs, and promotional materials with accurately rendered text.

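Apply precise background replacement, style transfer, and detail retouching to product images to significantly improve conversion rates.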

Keep character appearance highly consistent, producing not only character design sheets but also coherent narrative storyboards.

Why Use Seedream 5.0 Image Models on Atlas Cloud

Combining the advanced Seedream 5.0 Image Models with Atlas Cloud's GPU-accelerated platform delivers unmatched performance, scalability, and developer experience.

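Performance and Flexibility

Low latency:

GPU-optimized inference for real-time responses.

Unified API:

Integrate once to access Seedream 5.0 Image Models, GPT, Gemini, and DeepSeek.

Transparent pricing:

Per-token billing, with support for serverless mode.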

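Enterprise and Scale

Developer experience:

SDKs, analytics, fine-tuning tools, and templates, all in one place.

Reliability:

99.99% availability, RBAC access control, and compliance logging.

Security and compliance:

SOC 2 Type II certification, HIPAA compliance, and US data sovereignty.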

Explore More Model Families

Seedream 5.0 Image Models

Seedream 5.0 (by ByteDance) is a next-generation multimodal visual synthesis engine. By fusing real-time web retrieval with logical reasoning, it accurately interprets physical laws and complex instructions. It serves as an end-to-end visual productivity tool for professional designers and creators, supporting the entire workflow from the spark of inspiration to instant generation and precision editing.

Seedance 2.0 Video Models

Seedance 2.0 (by ByteDance) is a multimodal video generation model that redefines "controllable creation," moving beyond the limitations of text or start/end frames. It supports quad-modal inputs (text, image, video, and audio) and introduces a "Universal Reference" system. By precisely replicating the composition, camera movement, and character actions from reference assets, Seedance 2.0 addresses critical issues with character consistency and physical coherence, letting creators act as true "directors" with deep control over their output.

Vidu Video Models

Vidu (by ShengShu Technology) is a foundational video model built on the proprietary U-ViT architecture, combining the strengths of Diffusion and Transformer models. It features superior semantic understanding and generation capabilities, producing coherent, fluid visuals that adhere to physical laws without the need for interpolation. With exceptional spatiotemporal consistency and a deep understanding of diverse cultural elements, Vidu empowers professional filmmakers and creators with a stable, efficient, and imaginative tool for video production.

MiniMax LLM Models

MiniMax is a large language model developed by MiniMax AI, focused on efficient reasoning, long-context understanding, and scalable text generation. It is designed for complex tasks such as dialogue systems, document analysis, content creation, and AI agents. With an emphasis on high performance at lower computational cost, MiniMax is well suited for enterprise applications and developer use cases where stability, efficiency, and cost control are important.

GLM LLM Models

GLM (General Language Model) is a large language model developed by ZAI (Zhipu AI) for text understanding, generation, and reasoning. It supports both Chinese and English and performs well in dialogue, content creation, translation, and code assistance. GLM is widely used in chatbots, enterprise AI systems, and developer applications due to its stable performance and versatility.

Moonshot LLM Models

Kimi is a large language model developed by Moonshot AI, designed for reasoning, coding, and long-context understanding. It performs well in complex tasks such as code generation, analysis, and intelligent assistants. With strong performance and efficient architecture, Kimi is suitable for enterprise AI applications and developer use cases. Its balance of capability and cost makes it an increasingly popular choice in the LLM ecosystem.

OpenAI Model Families

Explore OpenAI’s language and video models on Atlas Cloud: ChatGPT for advanced reasoning and interaction, and Sora-2 for physics-aware video generation.

Van Video Models

Van Model is a flagship video model family, perfectly retaining the cinematic visuals and complex dynamics of 3D VAE and Flow Matching. By leveraging proprietary compute distillation, it breaks the "quality equals cost" barrier to deliver extreme inference speeds and ultra-low costs. This makes Van the premier engine for enterprises and developers seeking high-frequency, scalable video production on a budget.

Kling 3.0 Video Models

Kling AI Video 3.0 (by Kuaishou) is a groundbreaking model designed to bridge the worlds of sound and visuals through its unique Single-pass architecture. By simultaneously generating visuals, natural voiceovers, sound effects, and ambient atmosphere, it eliminates the disjointed workflows of traditional tools. This true audio-visual integration simplifies complex post-production, providing creators with an immersive storytelling solution that significantly boosts both creative depth and output efficiency.

Veo 3.1 Video Models

Veo 3.1 (by Google) is a flagship generative video model that sets a new standard for cinematic AI by deeply integrating semantic capabilities to deliver cinematic visuals, synchronized audio, and complex storytelling in a single workflow. Distinguishing itself through superior adherence to cinematic terminology and physics-based consistency, it offers professional filmmakers an unparalleled tool for transforming scripts into coherent, high-fidelity productions with precise directorial control.

Sora-2 Video Models

The Sora-2 family from OpenAI is a next-generation video and audio generation model family, supporting both text-to-video and image-to-video output with synchronized dialogue, sound effects, improved physical realism, and fine-grained control.

Nano Banana Image Models

Nano Banana is a fast, lightweight image generation model for playful, vibrant visuals. Optimized for speed and accessibility, it creates high-quality images with smooth shapes, bold colors, and clear compositions, making it well suited to mascots, stickers, icons, social posts, and fun branding.