Atlas Cloud is integrating the highly anticipated GLM-5, further expanding its lineup of generative AI models.

- Model Positioning: Created by Zhipu AI (Z.ai), this new flagship model represents a qualitative leap over the solid foundation of the GLM-4 series. With a massive 744-billion-parameter MoE architecture and breakthrough "Slime" post-training technology, GLM-5 pushes complex reasoning, coding, and agentic capabilities to new heights.

- Core Features: Built specifically for high-difficulty tasks, GLM-5 is designed to handle complex systems engineering, long-horizon autonomous agent workflows, and deep logical problem-solving.

- Price: $0.80 per 2.56M tokens (input/output)
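Here is a rough cost estimate that puts the listed price in concrete terms. It assumes the $0.8 rate applies to every 2.56M tokens of combined input and output, which is how the listing reads. Check current Atlas Cloud pricing before you budget with it. `estimate_cost_usd` is a hypothetical helper.

```python
# Rate as listed above: $0.8 per 2.56M tokens.
# Assumption: input and output tokens are billed at this same rate.
PRICE_USD = 0.8
TOKENS_PER_PRICE_UNIT = 2_560_000


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimated charge in USD for the given prompt and completion token counts."""
    total_tokens = input_tokens + output_tokens
    return total_tokens / TOKENS_PER_PRICE_UNIT * PRICE_USD


if __name__ == "__main__":
    # 10,000 requests, each with ~1,500 prompt tokens and ~500 completion tokens.
    requests_made = 10_000
    cost = estimate_cost_usd(requests_made * 1_500, requests_made * 500)
    print(f"Estimated cost: ${cost:.2f}")
```

Under those assumptions, the example workload (20M tokens in total) comes to $6.25.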

Core Technology: Tighter Logic and Smarter Coding with Slime & MoE Architecture

744B Parameter MoE Architecture & Massive Data Foundation

GLM-5 makes a major jump in model scale and data breadth, positioning itself as a new benchmark for open-weight models.

- Ultra-Large Scale: The model expands to 744 billion total parameters (approx. 40B active per token) using a Mixture-of-Experts (MoE) architecture. Compared with Llama 3.1 405B or DeepSeek-V3, GLM-5 has significantly more capacity, leaving room for a larger store of world knowledge.

- Trillion-Level Token Training: Pre-trained on a staggering 28.5 trillion tokens.

- Deployment Advantage: Access directly via Atlas Cloud’s OpenAI-compatible API to enjoy enterprise-grade inference speeds without the infrastructure headache.
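The gap between total parameters (744B) and active parameters (approx. 40B) comes mainly from sparse expert routing: a router sends each token to only a few experts. The sketch below shows generic top-k gating in plain Python. The number of experts, the value of k, and the toy experts are illustrative assumptions, not GLM-5's actual configuration.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Vector,
    router_logits: Sequence[float],
    experts: Sequence[Callable[[Vector], Vector]],
    k: int = 2,
) -> Vector:
    """Combine the outputs of the top-k experts, weighted by renormalized gate scores.

    Only the k selected experts are evaluated. The rest are skipped entirely,
    which is why an MoE model's active parameter count is far below its total.
    """
    # Pick the k experts with the highest router logits.
    top = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, so the gate weights sum to 1.
    weights = softmax([router_logits[i] for i in top])
    out = [0.0] * len(token)
    for w, i in zip(weights, top):
        y = experts[i](token)  # only selected experts run
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


if __name__ == "__main__":
    # Eight toy experts; expert i simply scales its input by (i + 1).
    experts = [lambda v, s=i + 1: [s * x for x in v] for i in range(8)]
    logits = [0.1, 2.0, -1.0, 0.5, 2.0, 0.0, -0.5, 0.3]
    print(moe_forward([1.0, 2.0], logits, experts, k=2))
    # Rough share of parameters active per token, from the figures above.
    print(f"Active share: {40 / 744:.1%}")
```

With these toy values, experts 1 and 4 win with equal weight, so the output is the average of their results. Real MoE layers apply the same idea to learned feed-forward blocks over batches of tokens.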

Breakthrough "Agentic" System Engineering Capabilities

GLM-5 is engineered for long-horizon, complex systemic tasks.

- Long-Horizon Planning: For tasks requiring dozens of reasoning steps (e.g., automated software development, legal document drafting), GLM-5 demonstrates exceptional stability.

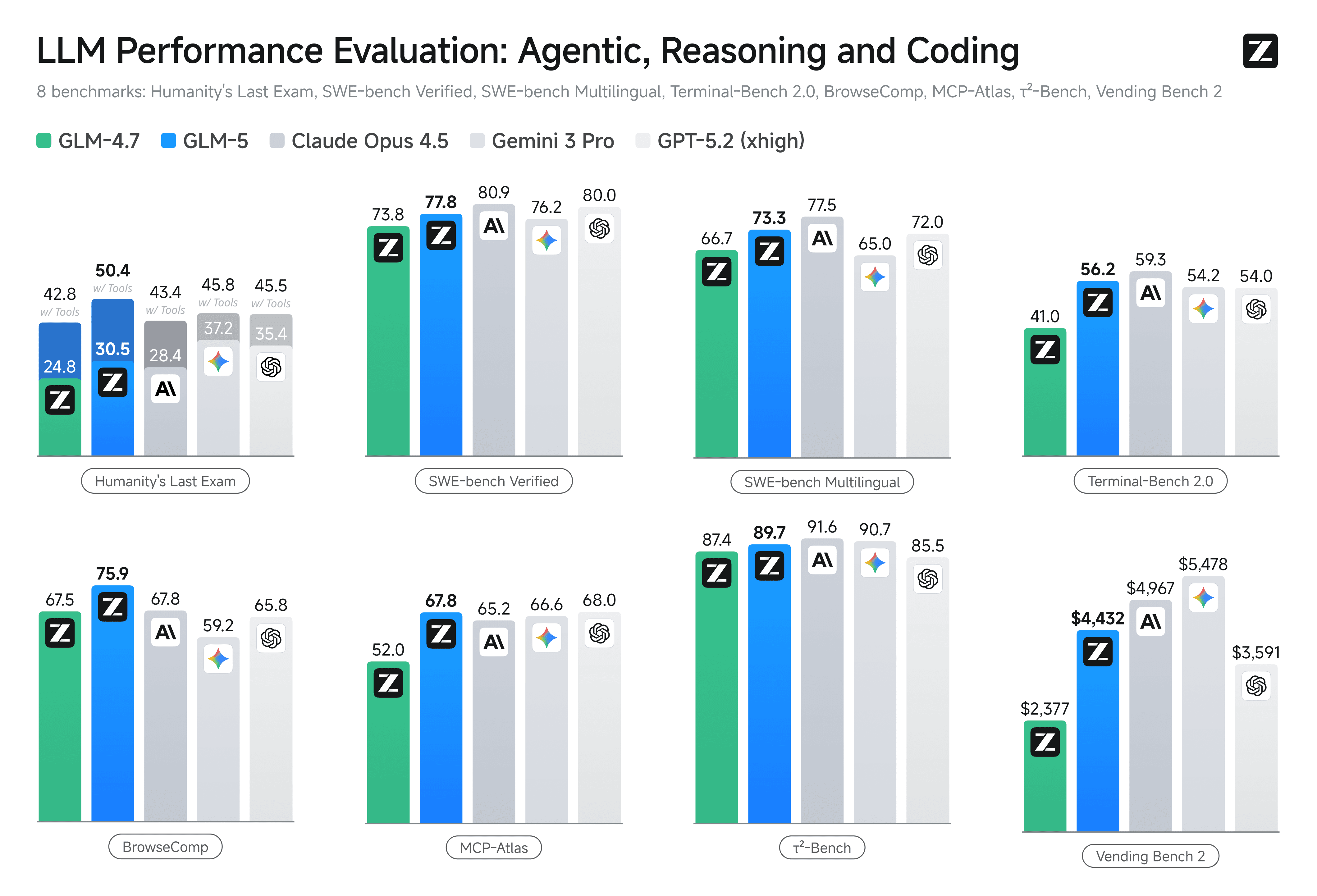

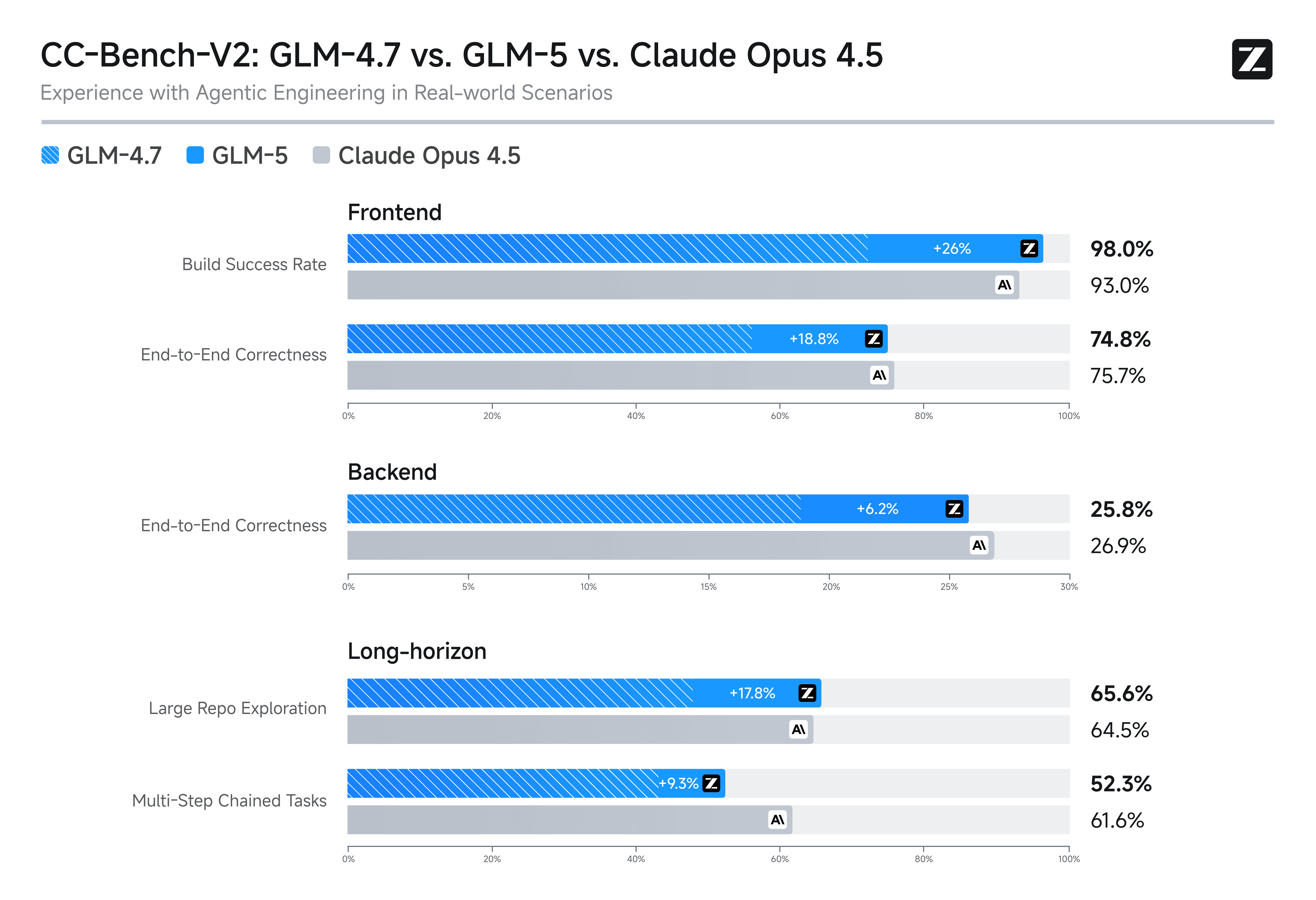

- Performance Comparison: In official evaluations, its agentic capabilities have significantly narrowed the gap with top-tier closed-source models like Claude Opus 4.5, Gemini 3 Pro, and GPT-5.2 (xhigh). It outperforms current SOTA open-source models on benchmarks like Vending Bench.

- API Use Cases: For developers building autonomous AI Agents, Atlas Cloud’s high-concurrency API allows you to replicate a Devin-like automated programming experience at a lower cost, without managing complex model backends.
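Long-horizon agent workflows usually reduce to a loop. At each step, the model either requests a tool call or returns a final answer, and each tool result is appended to the conversation before the next step. The sketch below uses a hypothetical step format (`{"action": "tool", ...}` / `{"action": "final", ...}`) and a scripted stand-in for the model so it runs offline. Connecting it to GLM-5 would mean replacing `model_step` with a real API call and adapting to the API's actual tool-calling format.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
Step = Dict[str, object]


def run_agent(
    task: str,
    model_step: Callable[[List[Message]], Step],
    tools: Dict[str, Callable[..., str]],
    max_steps: int = 20,
) -> str:
    """Run a plan-act loop until the model returns a final answer or the step budget runs out."""
    history: List[Message] = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        step = model_step(history)
        if step["action"] == "final":
            return str(step["content"])
        # Execute the requested tool and feed the observation back to the model.
        name = str(step["name"])
        args = step.get("args", {})
        result = tools[name](**args)
        history.append({"role": "assistant", "content": f"call {name}({args})"})
        history.append({"role": "tool", "content": result})
    raise RuntimeError(f"no final answer after {max_steps} steps")


def scripted_model(history: List[Message]) -> Step:
    """Hypothetical stand-in for GLM-5: run the tests once, then report the result."""
    if not any(m["role"] == "tool" for m in history):
        return {"action": "tool", "name": "run_tests", "args": {"path": "tests/"}}
    return {"action": "final", "content": "Tests: " + history[-1]["content"]}


if __name__ == "__main__":
    tools = {"run_tests": lambda path: f"3 passed in {path}"}
    print(run_agent("Fix the failing build", scripted_model, tools))
```

The `max_steps` budget matters in practice: with dozens of steps per task, a hard cap keeps a confused agent from looping (and spending tokens) indefinitely.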

"Slime" Asynchronous RL & The Leap in Programming Logic

Zhipu AI introduces a novel post-training technology in GLM-5, significantly boosting logical rigor and code generation quality.

- Technical Innovation: Introduces "Slime," an asynchronous reinforcement learning (RL) infrastructure designed to address the inefficiency of RL post-training for large-scale models.

- Capability Jump: In internal and academic benchmarks, GLM-5 drastically outperforms its predecessor, GLM-4.7, in frontend/backend development and long-term planning, ranking first among open-source models.

- Ease of Integration: Its high-precision coding and logic make it an ideal backend for coding assistants. Via Atlas Cloud, users can switch between GLM-5 and other vision and language models (such as Qwen and MiniMax) to benchmark performance and confirm where GLM-5 excels on their specific business logic.
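This article describes Slime only as an asynchronous RL infrastructure. The sketch below illustrates the general asynchronous idea and does not reflect Slime's design or API. Rollout generation and training are decoupled with an `asyncio.Queue`: rollout workers keep producing samples while the trainer consumes them in batches, so neither side waits for the other to finish a full round. The rewards and the "update" are toy placeholders.

```python
import asyncio
import random
from typing import List, Tuple

Sample = Tuple[str, float]  # (generated text, reward)


async def rollout_worker(worker_id: int, queue: "asyncio.Queue[Sample]", n: int) -> None:
    """Generate n toy rollouts and push them into the shared buffer."""
    for i in range(n):
        await asyncio.sleep(random.uniform(0, 0.01))  # simulate variable generation time
        text = f"w{worker_id}-sample{i}"
        reward = random.random()  # toy reward; stands in for a verifier or reward model
        await queue.put((text, reward))


async def trainer(queue: "asyncio.Queue[Sample]", total: int, batch_size: int) -> List[float]:
    """Consume samples as they arrive and run one (toy) update per full batch."""
    batch_means: List[float] = []
    batch: List[Sample] = []
    for _ in range(total):
        batch.append(await queue.get())
        if len(batch) == batch_size:
            # A real trainer would compute a policy-gradient update here.
            batch_means.append(sum(r for _, r in batch) / batch_size)
            batch.clear()
    return batch_means


async def main(workers: int = 4, per_worker: int = 8, batch_size: int = 4) -> List[float]:
    # A bounded buffer applies backpressure if rollouts outpace training.
    queue: "asyncio.Queue[Sample]" = asyncio.Queue(maxsize=16)
    producers = [rollout_worker(w, queue, per_worker) for w in range(workers)]
    total = workers * per_worker
    # Producers and trainer run concurrently instead of alternating in lockstep.
    results = await asyncio.gather(trainer(queue, total, batch_size), *producers)
    return results[0]


if __name__ == "__main__":
    print(asyncio.run(main()))
```

In a synchronous setup, the trainer would wait for the slowest rollout in every round. The bounded queue lets fast and slow generations overlap with training, at the cost of training on slightly stale policy samples.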

Real-World Applications: Automating Legacy Code Refactoring & Expert-Level Research Analysis

Complex Software Engineering & Automated "Legacy Code" Refactoring

Senior developers often inherit "spaghetti code" with missing documentation and chaotic logic. Standard models, limited by context window size or reasoning depth, often fail to grasp repository-wide dependencies and generate code that doesn't compile or introduces new bugs.

- Repository-Level Understanding & Repair: Thanks to the 744B architecture and long-context capabilities, GLM-5 acts as an "AI Architect." Users can upload an entire project repository; the model analyzes coupling between modules and automatically plans and executes cross-file refactoring (e.g., splitting a monolith into microservices).

- Seamless IDE Integration: Use Atlas Cloud’s API to integrate GLM-5’s reasoning into local workflows with minimal migration cost, shifting from "AI coding assistance" to "Autonomous Bug Fixing."

- End-to-End Test Generation: For legacy systems that lack test cases, GLM-5 can reverse-engineer business rules from the existing code and write high-coverage unit tests, drastically reducing maintenance risk.
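Repository-level work starts with getting the relevant files into the prompt. Here is a minimal sketch that assumes plain-text source files and uses a rough character budget in place of a real tokenizer. The `pack_repository` name, the file extensions, and the budget are illustrative assumptions. In practice, you would size the budget to stay under the model's documented context limit.

```python
from pathlib import Path
from typing import Iterable


def pack_repository(
    root: str,
    extensions: Iterable[str] = (".py", ".js", ".ts", ".java", ".go"),
    max_chars: int = 200_000,
) -> str:
    """Concatenate source files under `root`, each prefixed with its relative path.

    Files are added in sorted order until the next one would exceed the budget.
    The path headers let the model refer to files when planning cross-file changes.
    """
    root_path = Path(root)
    exts = set(extensions)
    parts = []
    used = 0
    for path in sorted(root_path.rglob("*")):
        if not path.is_file() or path.suffix not in exts:
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        chunk = f"### FILE: {path.relative_to(root_path).as_posix()}\n{text}\n"
        if used + len(chunk) > max_chars:
            break  # stop before overflowing the budget; a real tool might summarize the rest
        parts.append(chunk)
        used += len(chunk)
    return "".join(parts)


if __name__ == "__main__":
    prompt = (
        "Analyze module coupling in this repository and propose a refactoring plan.\n\n"
        + pack_repository(".")
    )
    print(prompt[:500])
```

A production tool would rank files by relevance (for example, by import graph distance from the code being changed) rather than stopping at the first file that exceeds the budget.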

Deep Research Analysis & Cross-Lingual Report Synthesis

Analysts and researchers face information overload. Traditional LLMs often struggle with deep reasoning, verifying data authenticity, and avoiding hallucinations in long multilingual documents.

- Deep Reasoning on Massive Knowledge: Trained on 28.5T tokens, GLM-5 draws on an exceptionally broad knowledge base. In complex industry analysis, it goes beyond summarization: it identifies logical gaps in reports and combines historical data to infer market trends, offering "expert intuition" rather than simple text stacking.

- High Concurrency & Low Latency: For institutions monitoring global sentiment in real time, self-hosting super-large models is cost-prohibitive. Through Atlas Cloud's high-availability API, users can process hundreds of multilingual documents concurrently on elastic cloud compute, with no need for expensive GPU clusters.

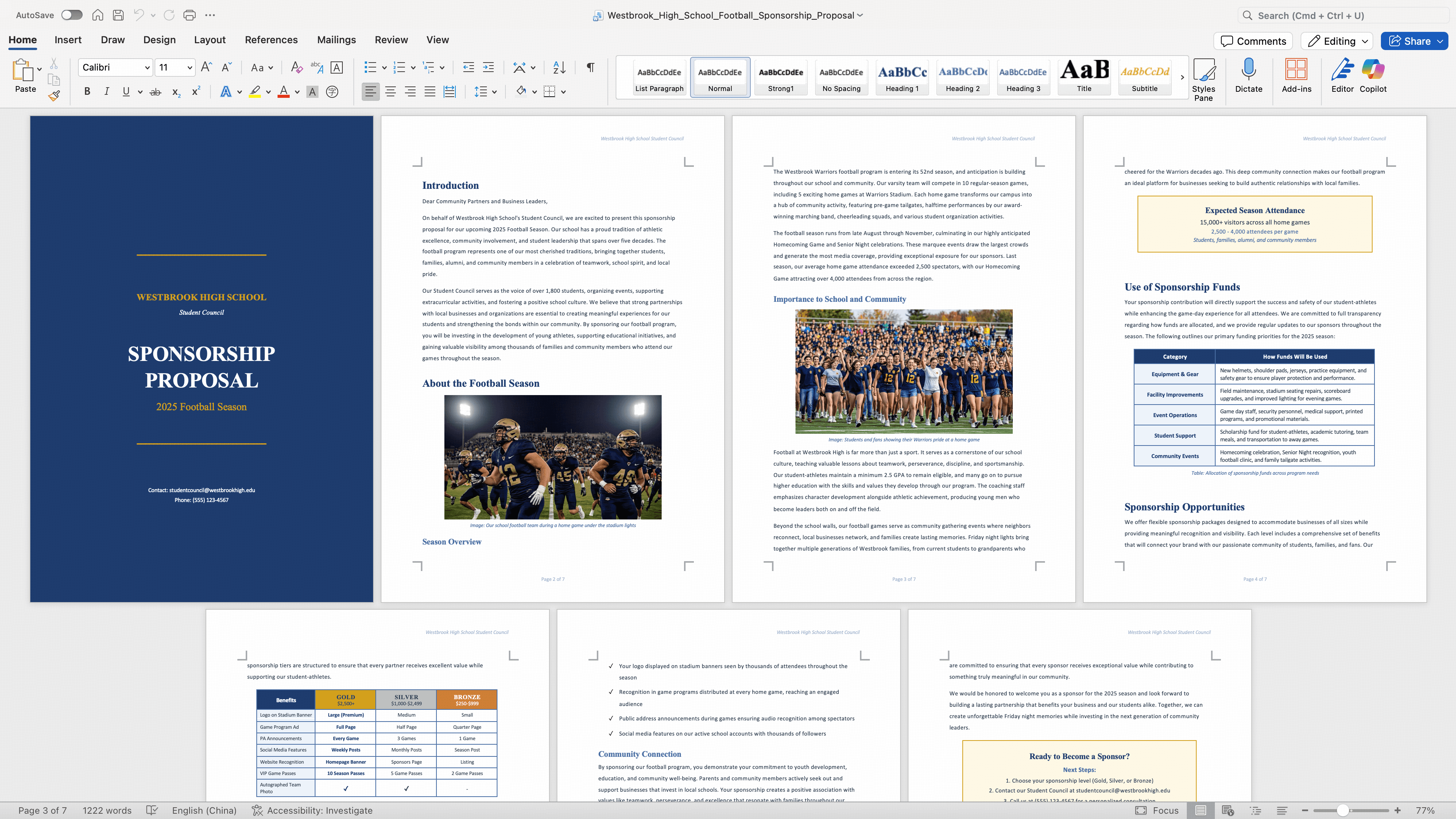

- Structured Data Cleaning: GLM-5’s superior instruction-following allows it to precisely extract data (e.g., revenue, growth rates) from unstructured news or PDFs and output formatted documents in PDF, Word, or Excel.

- Document (.docx) generated by GLM-5
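One common pattern for structured extraction is to ask the model for JSON with fixed keys, then validate and tabulate the reply yourself. The sketch below assumes the model was prompted to return a JSON array of objects with `company`, `revenue`, and `growth_rate` fields (a hypothetical schema). It writes the rows as CSV, which Excel can open. Producing .docx or .xlsx files directly would require a third-party library.

```python
import csv
import io
import json
from typing import Dict, List

REQUIRED_FIELDS = ("company", "revenue", "growth_rate")  # hypothetical schema
FENCE = "`" * 3  # Markdown code-fence marker that models often wrap JSON in


def parse_extraction(reply: str) -> List[Dict[str, object]]:
    """Parse a model reply into rows, rejecting anything that does not match the schema."""
    text = reply.strip()
    # Tolerate a reply wrapped in a Markdown code fence such as ```json ... ```.
    if text.startswith(FENCE):
        text = text.split("\n", 1)[1].rsplit(FENCE, 1)[0]
    rows = json.loads(text)
    if not isinstance(rows, list):
        raise ValueError("expected a JSON array")
    for row in rows:
        if not isinstance(row, dict):
            raise ValueError(f"expected an object, got {row!r}")
        missing = [f for f in REQUIRED_FIELDS if f not in row]
        if missing:
            raise ValueError(f"row {row!r} is missing {missing}")
    return rows


def rows_to_csv(rows: List[Dict[str, object]]) -> str:
    """Render validated rows as CSV text, ignoring any extra keys."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=REQUIRED_FIELDS, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


if __name__ == "__main__":
    reply = '[{"company": "ExampleCo", "revenue": 1200000, "growth_rate": 0.12}]'
    print(rows_to_csv(parse_extraction(reply)))
```

Validating in code, rather than trusting the reply, turns a formatting slip into an explicit error you can retry instead of a silently malformed spreadsheet.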

Atlas Cloud: The Best Way to Master GLM-5

Say goodbye to tedious API key management and platform switching. On Atlas Cloud, you can:

- One-Click Concurrent Testing: Instantly switch between GLM-5, DeepSeek, MiniMax, and other mainstream models, and have them answer the same prompt simultaneously.

- Ultimate Cost-Efficiency: Find the result that fits your needs at the lowest token cost.
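The side-by-side comparison described above can also be scripted against the API: send the same prompt to several models in parallel and collect the replies. In this sketch, only `zai-org/glm-5` and the endpoint URL come from this article. The second model ID is a placeholder to replace with an ID from the Atlas Cloud model list. `send` is passed in as a parameter so the comparison logic can be tested without network access. `send_http` assumes an OpenAI-style response body.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Payload = Dict[str, object]


def build_payload(model: str, prompt: str, max_tokens: int = 1024) -> Payload:
    """Chat-completions request body in the format used by this article's example."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "stream": False,
    }


def compare_models(
    models: List[str],
    prompt: str,
    send: Callable[[Payload], str],
    max_workers: int = 8,
) -> Dict[str, str]:
    """Send the same prompt to every model concurrently; return {model: reply}."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        replies = pool.map(lambda m: send(build_payload(m, prompt)), models)
        return dict(zip(models, replies))


def send_http(payload: Payload) -> str:
    """Post one request to Atlas Cloud and return the reply text."""
    import os

    import requests  # third-party (pip install requests); imported here so tests don't need it

    resp = requests.post(
        "https://api.atlascloud.ai/v1/chat/completions",
        headers={"Authorization": f"Bearer {os.environ['ATLASCLOUD_API_KEY']}"},
        json=payload,
        timeout=120,
    )
    resp.raise_for_status()
    # Assumption: OpenAI-style body with choices[0].message.content.
    return resp.json()["choices"][0]["message"]["content"]


if __name__ == "__main__":
    models = ["zai-org/glm-5", "PLACEHOLDER/another-model-id"]  # second ID is hypothetical
    results = compare_models(models, "Explain HTTP vs HTTPS in two sentences.", send_http)
    for model, reply in results.items():
        print(f"== {model} ==\n{reply}\n")
```

Threads suit this job because each call spends nearly all its time waiting on the network.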

How to Use GLM-5 on Atlas Cloud

Atlas Cloud lets you use models side by side: first in a playground, then through a single API.

Method 1: Use directly in the Atlas Cloud playground

Method 2: Access via API

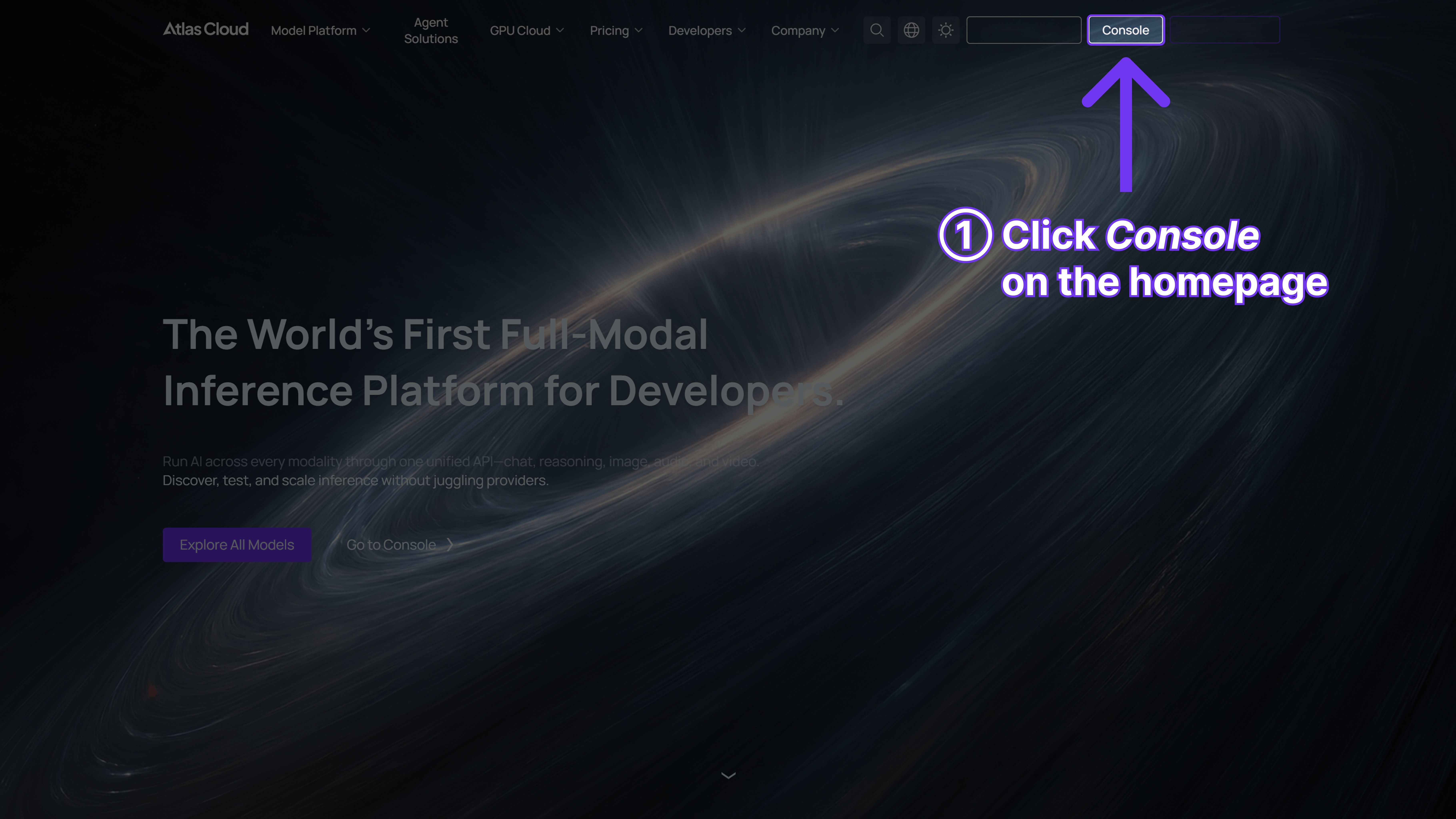

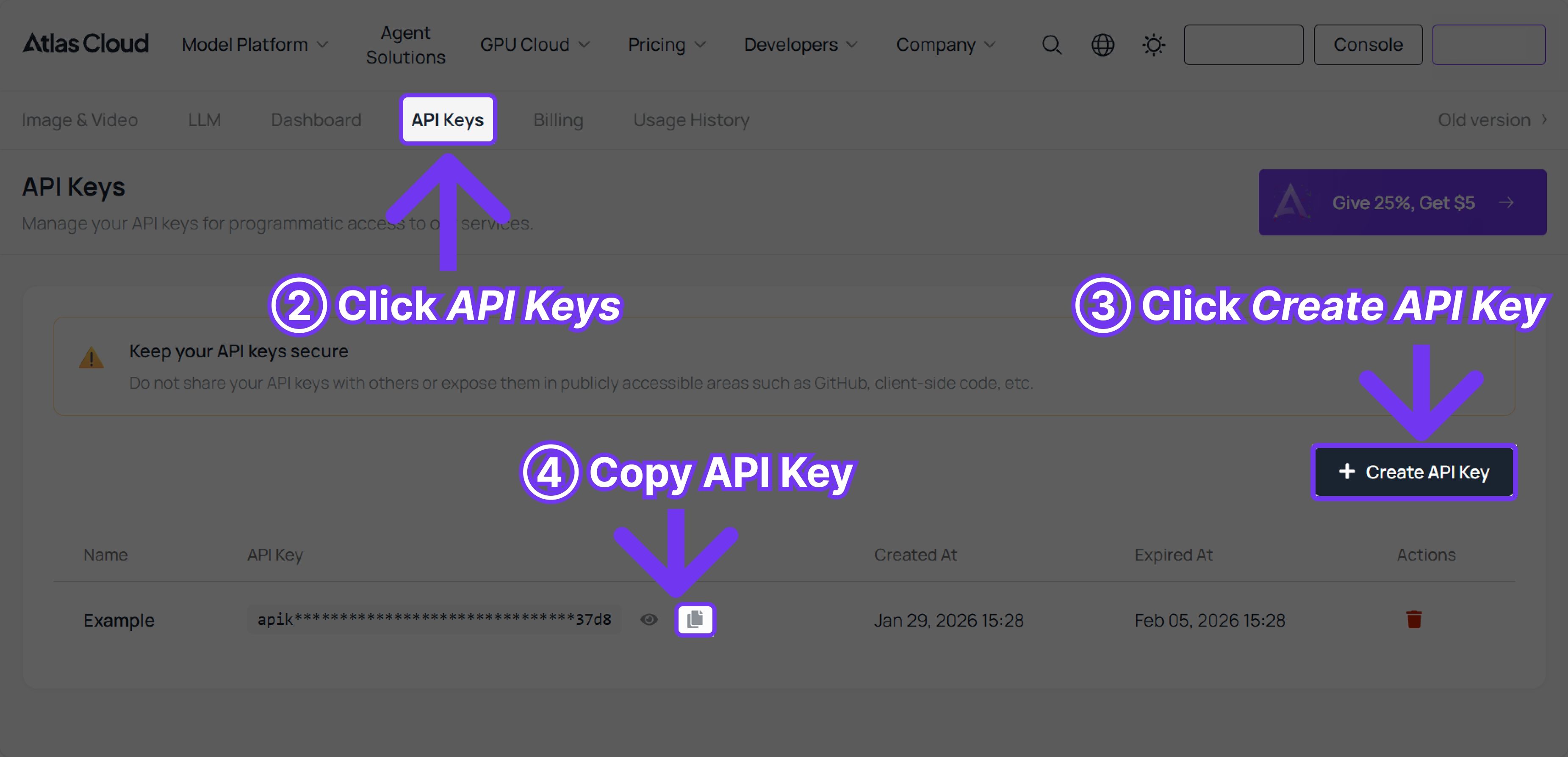

Step 1: Get your API key

Create an API key in your console and copy it for later use.

Step 2: Check the API documentation

Review the endpoint, request parameters, and authentication method in our API docs.

Step 3: Make your first request (Python example)

An example request to GLM-5:

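```python
import os

import requests

url = "https://api.atlascloud.ai/v1/chat/completions"
headers = {
    "Content-Type": "application/json",
    # Read the key from the environment (export ATLASCLOUD_API_KEY=... first).
    "Authorization": f"Bearer {os.environ['ATLASCLOUD_API_KEY']}",
}
data = {
    "model": "zai-org/glm-5",
    "messages": [
        {
            "role": "user",
            "content": "What is the difference between HTTP and HTTPS?",
        }
    ],
    "max_tokens": 1024,
    "temperature": 0.7,
    # Non-streaming: the full reply arrives as one JSON body that response.json() can parse.
    "stream": False,
}

response = requests.post(url, headers=headers, json=data, timeout=120)
response.raise_for_status()  # surface HTTP errors (e.g. an invalid key) early
print(response.json())
```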
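To receive tokens as they are generated, set `"stream": True`. An OpenAI-compatible endpoint then typically returns server-sent events (`data: {...}` lines ending with `data: [DONE]`) rather than a single JSON body, so `response.json()` no longer applies. The sketch below assumes Atlas Cloud follows that OpenAI streaming convention, with text in `choices[].delta.content`; confirm this in the API documentation from Step 2. `iter_stream_text` is a hypothetical helper.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield content deltas from OpenAI-style server-sent-event lines."""
    for line in lines:
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and SSE comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            delta = (choice.get("delta") or {}).get("content")
            if delta:
                yield delta


if __name__ == "__main__":
    import os

    import requests  # third-party: pip install requests

    response = requests.post(
        "https://api.atlascloud.ai/v1/chat/completions",
        headers={"Authorization": f"Bearer {os.environ['ATLASCLOUD_API_KEY']}"},
        json={
            "model": "zai-org/glm-5",
            "messages": [{"role": "user", "content": "What is the difference between HTTP and HTTPS?"}],
            "max_tokens": 1024,
            "stream": True,
        },
        stream=True,  # tell requests not to buffer the whole body
        timeout=120,
    )
    response.raise_for_status()
    # Decode as UTF-8; requests may otherwise fall back to ISO-8859-1 for text/* responses.
    response.encoding = "utf-8"
    for piece in iter_stream_text(response.iter_lines(decode_unicode=True)):
        print(piece, end="", flush=True)
    print()
```

Keeping the SSE parsing in its own function means it can be checked against recorded stream lines without calling the API.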