Kimi K2.5 on Atlas Cloud: Unlocking Native Vision, 1T MoE & Swarm Intelligence

Snapshot

$0.56/2.8M in/out

Atlas Cloud is expanding its generative AI ecosystem with the integration of Kimi K2.5.

- Model Definition: Developed by Moonshot AI, Kimi K2.5 elevates the multimodal foundation of the Kimi series through a Native Vision and Mixture-of-Experts (MoE) architecture.

- Core Benefits: Optimized for complex video stream analysis, automated agentic task execution, and high-aesthetic programming development.

- Availability: Available NOW!

While Kimi K2 set the industry standard for long-context processing, Kimi K2.5 utilizes its comprehensive reasoning and execution capabilities to drive the next generation of AI development.

Core Capabilities: From Conversational AI to Multimodal Execution

Kimi K2.5 represents a qualitative shift from a "single-dialogue interface" to a "comprehensive multimodal executor."

Agentic Intelligence: Swarm Thinking & Advanced Reasoning

Kimi K2.5 breaks the "single-threaded thought" limitation of traditional LLMs by introducing biologically inspired collaborative intelligence.

- Core Analysis:

- Agent Swarm Technology: A "self-directed" parallel processing system. The model decomposes complex objectives and orchestrates up to 100 sub-agents to collaborate in parallel. This extends Kimi’s signature "Thinking Mode" into tangible task execution.

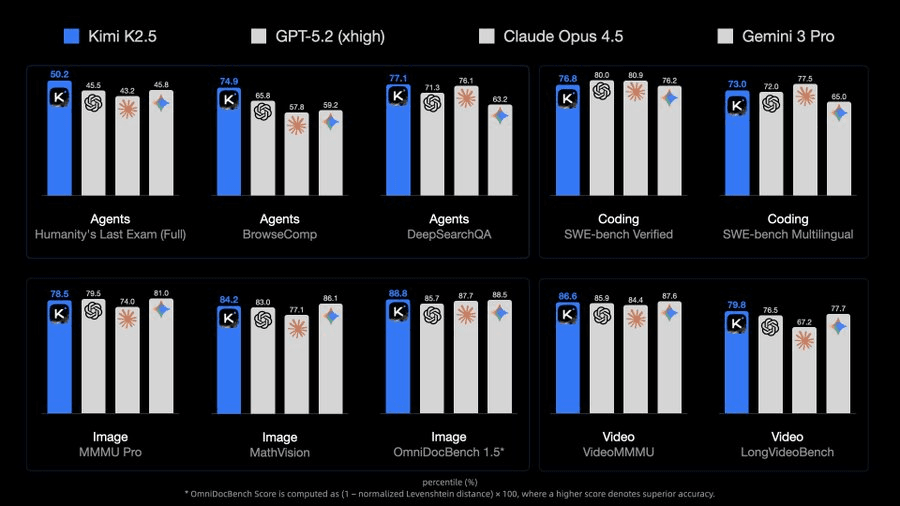

- Complex Task Dominance: Scoring 50.2% on the HLE (Humanity's Last Exam) benchmark, it can plan and carry out long-chain, multi-step instructions more reliably than previous models.

- Performance Comparison:

- Vs. Single-Agent: Delivers 4.5x faster execution speeds and handles task complexity far beyond Kimi K2 or standard GPT-4 class models.

- Vs. Competitors: Sets a global benchmark in agentic capabilities, outperforming top-tier competitors (including simulated environments for GPT-5.2 and Claude 4.5).
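The decompose-and-parallelize pattern described above can be sketched as a simple fan-out/fan-in. This is only an illustration of the idea, not how Kimi K2.5 implements Agent Swarm internally: `decompose`, `run_sub_agent`, and `run_swarm` are hypothetical names, and the worker is a stub where a real system would call the model.

```python
from concurrent.futures import ThreadPoolExecutor

MAX_SUB_AGENTS = 100  # upper bound quoted in the text above


def decompose(objective: str, parts: list[str]) -> list[str]:
    """Hypothetical planner: split one objective into independent sub-tasks."""
    return [f"{objective}: {part}" for part in parts]


def run_sub_agent(sub_task: str) -> str:
    """Hypothetical worker stub; a real sub-agent would call the model here."""
    return f"done({sub_task})"


def run_swarm(objective: str, parts: list[str]) -> list[str]:
    """Fan out the sub-tasks to parallel workers, then collect the results."""
    sub_tasks = decompose(objective, parts)
    if len(sub_tasks) > MAX_SUB_AGENTS:
        raise ValueError("too many sub-tasks for one swarm")
    workers = max(1, len(sub_tasks))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order, so results line up with sub_tasks.
        return list(pool.map(run_sub_agent, sub_tasks))


if __name__ == "__main__":
    print(run_swarm("audit site", ["links", "images", "scripts"]))
```

The speedup from a pattern like this depends on how independent the sub-tasks really are; tasks that must wait on each other gain little from parallel workers.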

Native Multimodal Vision: Direct Video & Image Analysis

- Core Analysis:

- Video as Input: Capable of processing direct video streams, not just static images. Pre-trained on 15T mixed text-image tokens, it deeply analyzes dynamic changes and logical relationships within videos.

- The Atlas Cloud Advantage: This makes it the perfect partner for Atlas Cloud’s video generation tools. Users can run multiple video models simultaneously on the platform, select the best results, and immediately feed them to Kimi for analysis—eliminating the need to switch between tools.

- Precision Layout Interpretation: Achieves Open-Source SOTA on benchmarks like MMMU Pro (78.5%) and VideoMMMU (86.6%), enabling the interpretation of precise architectural and design layouts.

- Performance Comparison:

- Vs. Bolt-on Vision Models: Unlike early multimodal models that "glued" vision encoders to LLMs, K2.5 features native fusion, reducing the hallucinations and comprehension disconnects that bolt-on designs tend to produce.

- Vs. Kimi K2: Evolves from a "Long-Text King" to a "Full-Sensory Generalist," bridging the perception gap of text-only models.

Code with Taste: Aesthetic Engineering & Frontend Generation

Kimi K2.5 elevates coding capabilities from functional implementation to the dimension of "Interactive Aesthetics."

- Core Analysis:

- Visual-to-Code Transformation: Users can upload design mockups or demo videos, and K2.5 instantly converts them into functional code.

- High-End Effects Support: Specifically optimized for complex 3D libraries like Three.js and dynamic effects. It generates "Expressive Motion" web pages that are not just functional, but visually stunning.

- Engineering Rigor: Scoring 76.8% on SWE-bench Verified, it proves it can handle serious backend logic alongside aesthetic frontend tasks.

- Performance Comparison:

- Vs. Standard Coding Models: While models like CodeLlama ensure code "doesn't crash," K2.5 ensures code "looks good" and follows "visual logic"—a massive leap in usability.

Architectural Revolution: MoE Efficiency & Generational Performance Leaps

Based on a Mixture-of-Experts (MoE) architecture, Kimi K2.5 balances computational efficiency with intelligence density, marking a shift from linear growth to exponential capability leaps.

- Core Analysis:

- The MoE Dividend: Utilizes a 1 Trillion (1T) parameter architecture but activates only 32B parameters during inference.

- Cost-Effective Scaling: This high-efficiency design aligns well with Atlas Cloud's low-cost infrastructure. Users can visually compare "Generation Quality vs. Cost" side-by-side, achieving premium output for minimal expense, all while accessing the model via OpenAI-compatible APIs.

- Performance Comparison:

- Vs. Kimi K2: A complete intelligent reconstruction. While K2 specialized in depth, K2.5 offers explosive growth in reasoning breadth and execution.

- Vs. Traditional LLM APIs: Thanks to MoE, K2.5 offers superior inference efficiency compared to GPT-4 level intelligence, making it the ideal foundation for high-performance AI applications.
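To put the 1T / 32B figures in proportion, the short calculation below derives the share of parameters active per token. It uses only the two numbers quoted above; treating per-token compute as roughly proportional to active parameters is a simplification, and the full set of weights still has to be stored and served.

```python
TOTAL_PARAMS = 1_000_000_000_000  # 1T total parameters (from the text)
ACTIVE_PARAMS = 32_000_000_000    # 32B activated during inference (from the text)


def active_fraction(active: int, total: int) -> float:
    """Share of the model's parameters used for a single forward pass."""
    return active / total


fraction = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
print(f"Active share per token: {fraction:.1%}")  # Active share per token: 3.2%
```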

Real-World Implementation: Building Scalable Agents & Multimodal Apps

The power of Kimi K2.5 lies not just in its specs, but in how it transforms daily workflows. Here are three specific application scenarios:

Multimodal Workflows: Streamlined Content Analysis & Selection

- Deep Video Understanding: Directly analyze dynamic logic within video streams, bypassing tedious pre-processing steps.

- Collaborative Model Filtering: Leverage Atlas Cloud to generate video assets in parallel, then use Kimi K2.5 to rapidly filter and select the highest quality output.

- Smart Cost Optimization: Visually compare the output quality and price of different models to find the optimal ROI for your project.
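As a rough sketch of the filter-and-select step, the helper below builds a single chat request that asks the model to compare several candidate videos. The `video_url` content type and the model ID come from the API example in this article; `build_selection_request` is a hypothetical helper, and whether the endpoint accepts several videos in one message is an assumption to verify against the API docs.

```python
def build_selection_request(video_urls, criteria, model="moonshotai/kimi-k2.5"):
    """Build a chat payload asking the model to pick the best candidate video.

    Hypothetical helper: sending several video parts in one message is an
    assumption, not documented behavior.
    """
    if not video_urls:
        raise ValueError("at least one candidate video is required")
    # One video part per candidate, in the order the caller supplied them.
    content = [{"type": "video_url", "video_url": {"url": u}} for u in video_urls]
    prompt = (
        f"There are {len(video_urls)} candidate videos, numbered from 1 in the "
        f"order given. Pick the best one for: {criteria}. "
        "Reply with the number and a one-sentence reason."
    )
    content.append({"type": "text", "text": prompt})
    return {"model": model, "messages": [{"role": "user", "content": content}]}


if __name__ == "__main__":
    request = build_selection_request(
        ["https://example.com/a.mp4", "https://example.com/b.mp4"],
        "smooth camera motion",
    )
    print(len(request["messages"][0]["content"]))
```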

Visual-Driven Development: From Design to Interactive Code

- Design Restoration: Instantly convert static images or video demos into frontend code complete with Three.js motion effects.

- Universal API Integration: Use OpenAI-compatible interfaces to easily plug this high-level programming capability into your existing development tools (VS Code, Cursor, etc.).

- High-Performance Inference: Handle complex, production-level code logic with ultra-low latency thanks to the MoE architecture.
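For the design-to-code flow, a local mockup first has to be packaged as a base64 data URL, the image format used in this article's API example. The sketch below shows one way to do that and wrap it in a chat payload; `to_data_url` and `build_mockup_request` are hypothetical helper names, and the prompt wording is only a placeholder.

```python
import base64
import mimetypes


def to_data_url(image_bytes: bytes, filename: str) -> str:
    """Encode raw image bytes as a data URL, guessing the MIME type from the name."""
    mime, _ = mimetypes.guess_type(filename)
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"not a recognized image file: {filename}")
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def build_mockup_request(image_bytes: bytes, filename: str, instructions: str) -> dict:
    """Hypothetical helper: chat payload pairing a mockup image with instructions."""
    return {
        "model": "moonshotai/kimi-k2.5",
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image_url",
                        "image_url": {"url": to_data_url(image_bytes, filename)},
                    },
                    {"type": "text", "text": instructions},
                ],
            }
        ],
    }


if __name__ == "__main__":
    payload = build_mockup_request(b"abc", "mockup.png", "Recreate this page in HTML.")
    print(payload["messages"][0]["content"][0]["image_url"]["url"])
```

In practice the bytes would come from reading the mockup file in binary mode, and the payload would be posted to the chat completions endpoint with your API key.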

Automated Agent Clusters: Scalable Task Execution

- Parallel Swarm Execution: Decompose massive tasks and assign them to up to 100 sub-agents simultaneously to drastically improve completion speed.

- Long-Range Logic Planning: Maintain exceptional logical consistency when executing multi-step, complex instructions.

- High-Concurrency Processing: Rely on Atlas Cloud's robust infrastructure to run large-scale agent networks and fully automate business processes.
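When many agent calls run at once, client code usually needs a cap on in-flight requests so it stays within rate limits. The sketch below shows one common way to do that with `asyncio.Semaphore`. `call_agent` is a hypothetical stand-in for a real model request, and the limit of 8 is an arbitrary illustration, not an Atlas Cloud quota.

```python
import asyncio


async def call_agent(task: str) -> str:
    """Hypothetical stand-in for one model request."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    return task.upper()


async def run_bounded(tasks: list[str], max_in_flight: int = 8) -> list[str]:
    """Run all tasks concurrently, with at most max_in_flight active at once."""
    semaphore = asyncio.Semaphore(max_in_flight)

    async def guarded(task: str) -> str:
        async with semaphore:
            return await call_agent(task)

    # gather() returns results in the same order as the input tasks.
    return await asyncio.gather(*(guarded(t) for t in tasks))


if __name__ == "__main__":
    print(asyncio.run(run_bounded(["plan", "search", "summarize"])))
```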

Ready to integrate these capabilities? Let's look at how to quickly configure your environment on Atlas Cloud.

Atlas Cloud: The best way to use Kimi K2.5

What to Expect in Atlas Cloud

Cost Efficiency & Speed

Focuses on maximizing output value and reducing wait times for users.

- Competitive Pricing: improved price-performance ratio for cost-effective generation.

- Accelerated Rendering: faster generation speeds that support rapid project turnaround.

Workflow Integration & API

Designed to fit into technical pipelines and support downstream tasks.

- Flexible Workflows: supports usage alongside other generative models.

- Actionable Outputs: facilitates immediate post-processing or modification of results.

- API Access: provides developer interfaces for automation and application integration.

How to Use Kimi K2.5 on Atlas Cloud

Atlas Cloud lets you use models side by side — first in a playground, then via a single API.

Method 1: Use directly in the Atlas Cloud playground

Method 2: Access via API

Step 1: Get your API key

Create an API key in your console and copy it for later use.
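One common way to keep the key out of source code is to export it as an environment variable and read it at runtime. The sketch below assumes the variable is named `ATLASCLOUD_API_KEY`, the name used in the request example in this article; `load_api_key` and `auth_headers` are hypothetical helper names.

```python
import os


def load_api_key(env_var: str = "ATLASCLOUD_API_KEY") -> str:
    """Read the API key from the environment and fail early if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return key


def auth_headers(api_key: str) -> dict:
    """Headers for a JSON request authenticated with a bearer token."""
    return {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
```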

Step 2: Check the API documentation

Review the endpoint, request parameters, and authentication method in our API docs.

Step 3: Make your first request (Python example)

Example: analyze an image and a video with Kimi K2.5

```python
import os

import requests

# Vision understanding example
# Image: use base64 encoding (data:image/png;base64,...)
# Video: use a URL (recommended for large files)

url = "https://api.atlascloud.ai/v1/chat/completions"
headers = {
    "Content-Type": "application/json",
    # Read the key from the environment; Python does not expand "$VAR" inside strings.
    "Authorization": f"Bearer {os.environ['ATLASCLOUD_API_KEY']}",
}
data = {
    "model": "moonshotai/kimi-k2.5",
    "messages": [
        {
            "role": "user",
            "content": [
                {
                    "type": "image_url",
                    "image_url": {
                        "url": "data:image/png;base64,<BASE64_IMAGE_DATA>"
                    },
                },
                {
                    "type": "video_url",
                    "video_url": {
                        "url": "https://example.com/your-video.mp4"
                    },
                },
                {
                    "type": "text",
                    "text": "Please describe the content of this image/video",
                },
            ],
        }
    ],
    "max_tokens": 32768,
    "temperature": 1,
    # Non-streaming, so the whole reply arrives as one JSON object for response.json().
    "stream": False,
}

response = requests.post(url, headers=headers, json=data)
response.raise_for_status()  # raise on HTTP errors instead of printing an error body
print(response.json())
```
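When `"stream": True` is set in the request body, an OpenAI-compatible endpoint typically returns server-sent events rather than a single JSON object, so the reply has to be read line by line. The parser below is a minimal sketch that assumes Atlas Cloud follows the usual OpenAI chunk format (`data: {...}` lines ending with `data: [DONE]`). `iter_stream_text` is a hypothetical helper name.

```python
import json


def iter_stream_text(lines):
    """Yield text fragments from OpenAI-style server-sent event lines.

    Assumes each event is a 'data: <json>' line and the stream ends with
    'data: [DONE]'; verify this against the Atlas Cloud API docs.
    """
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and other fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


if __name__ == "__main__":
    sample = [
        b'data: {"choices": [{"delta": {"content": "Hello"}}]}',
        b"",
        b'data: {"choices": [{"delta": {"content": " world"}}]}',
        b"data: [DONE]",
    ]
    print("".join(iter_stream_text(sample)))
```

With `requests`, the lines would come from `requests.post(..., stream=True)` followed by `response.iter_lines()`.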