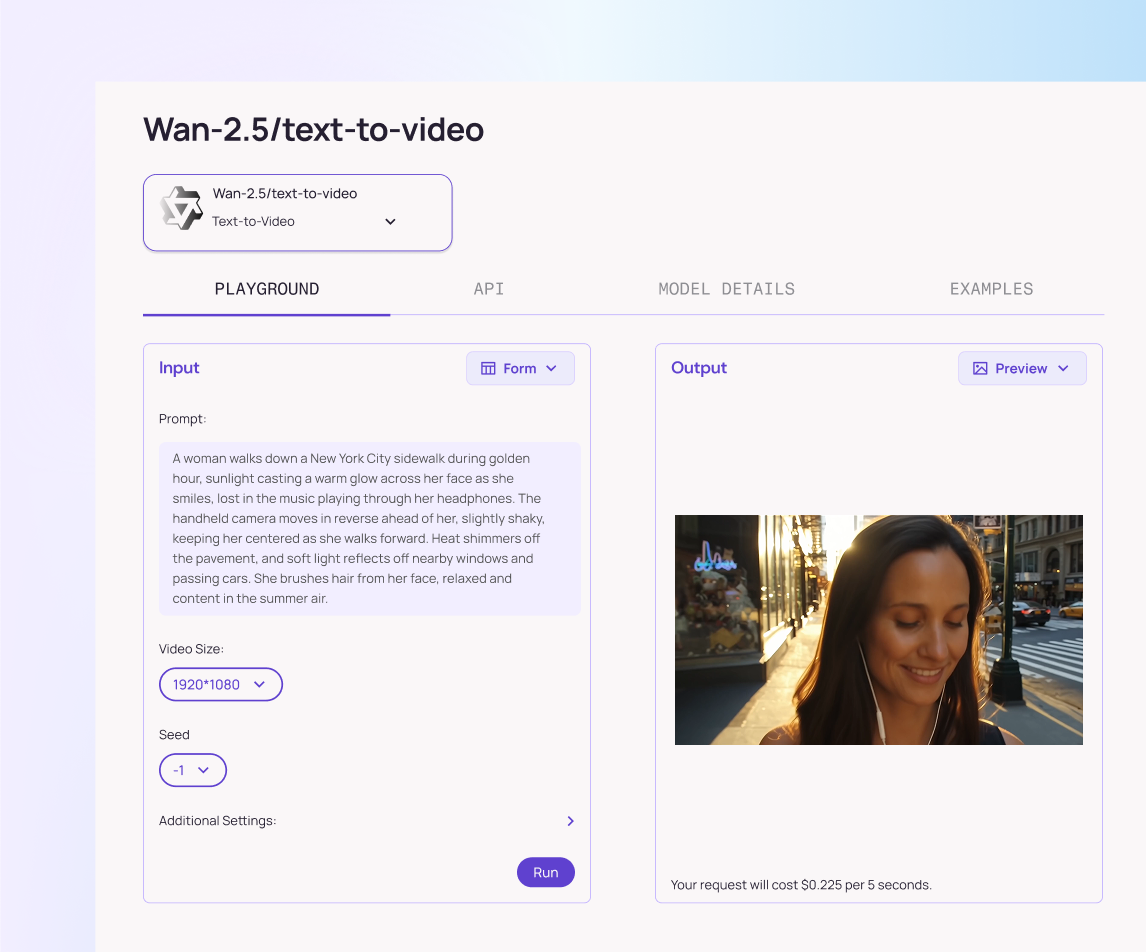

Wan2.5 Video Models

Wan 2.5 is Alibaba’s state-of-the-art multimodal video generation model, capable of producing high-fidelity, audio-synchronized videos from text or images. It delivers realistic motion, natural lighting, and strong prompt alignment across 480p to 1080p outputs—ideal for creative and production-grade workflows.

Explore the Leading Wan2.5 Video Models

Wan-2.5 Text-to-video

Convert prompts into cinematic video clips with synchronized sound. Wan 2.5 generates 480p/720p/1080p outputs with stable motion, native audio sync, and prompt-faithful visual storytelling.

Wan-2.5 Text-to-video Fast

A speed-optimized text-to-video option that prioritizes lower latency while retaining strong visual fidelity. Ideal for iteration, batch generation, and prompt testing.

Wan-2.5 Image-to-video

Bring static images to life with dynamic motion, lighting consistency, and synchronized audio. This variant smoothly animates reference visuals into short video sequences.

Wan-2.5 Image-to-video Fast

Get animated visuals from your images faster without a major quality sacrifice. Ideal for preview workflows and for producing animated assets at scale.
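The four variants above differ along two axes: input type (text or image) and whether speed is prioritized. A minimal sketch of that choice follows. The strings are the display names from this page, not confirmed API model identifiers, and `InputKind` and `pick_variant` are hypothetical helper names.

```python
from enum import Enum


class InputKind(Enum):
    TEXT = "text"
    IMAGE = "image"


# Display names as listed on this page; actual API model IDs may differ.
VARIANTS = {
    (InputKind.TEXT, False): "Wan-2.5 Text-to-video",
    (InputKind.TEXT, True): "Wan-2.5 Text-to-video Fast",
    (InputKind.IMAGE, False): "Wan-2.5 Image-to-video",
    (InputKind.IMAGE, True): "Wan-2.5 Image-to-video Fast",
}


def pick_variant(kind: InputKind, prefer_speed: bool) -> str:
    """Return the listed variant for an input type and speed preference."""
    return VARIANTS[(kind, prefer_speed)]
```

One plausible workflow is to iterate on prompts with a Fast variant, then switch `prefer_speed` to `False` for the final render.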

What Makes Wan2.5 Video Models Stand Out

Native A/V Sync

Generates perfectly aligned visuals and sound without extra editing.

Unified Multimodal Core

Handles text, image, video, and audio in one seamless model.

Extended Clip Duration

Creates videos up to 10 seconds long for richer storytelling.

Flexible Prompt Inputs

Works from text or images to generate or animate content.

Multilingual Prompts

Understands Chinese, English, and other languages natively.

Cinematic Control

Direct camera motion, pacing, and composition right from your prompt.
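Since camera motion, pacing, and composition are all expressed in the prompt itself, a small helper can keep those directions consistent across many prompts. This is only a sketch: the page does not specify any prompt syntax, so the `camera:`, `pacing:`, and `composition:` labels and the `build_prompt` function are illustrative assumptions.

```python
from typing import Optional


def build_prompt(
    subject: str,
    camera: Optional[str] = None,
    pacing: Optional[str] = None,
    composition: Optional[str] = None,
) -> str:
    """Join a subject with optional cinematic directions into one prompt string."""
    parts = [subject.strip()]
    # The labels below are an assumed convention, not a documented syntax.
    if camera:
        parts.append(f"camera: {camera}")
    if pacing:
        parts.append(f"pacing: {pacing}")
    if composition:
        parts.append(f"composition: {composition}")
    return ", ".join(parts)
```

For example, `build_prompt("a lighthouse at dusk", camera="slow dolly-in")` produces a single prompt string that carries both the scene and the camera direction.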

What You Can Do with Wan2.5 Video Models

Generate cinematic clips 5 to 10 seconds long at 480p, 720p, or 1080p.

Sync visuals and audio in one pass — lip-sync, dialogue, sound effects and background music come together automatically.

Animate any image by turning still visuals into dynamic motion with matching audio cues.

Localize with multilingual prompts: Chinese, English, and more are supported natively.

Preview ideas rapidly with the Fast variants to test concepts before a full render.
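The limits stated above (clips of 5 to 10 seconds at 480p, 720p, or 1080p) can be checked on the client before a job is submitted. The sketch below validates those limits and builds a request body. The `ClipRequest` class and the payload field names are hypothetical and not taken from Atlas Cloud's API, so the real parameter names should come from the official API reference.

```python
from dataclasses import dataclass

# Limits as stated on this page.
ALLOWED_RESOLUTIONS = ("480p", "720p", "1080p")
MIN_SECONDS, MAX_SECONDS = 5, 10


@dataclass(frozen=True)
class ClipRequest:
    prompt: str
    resolution: str = "720p"
    duration_seconds: int = 5

    def __post_init__(self) -> None:
        # Reject out-of-range values early instead of waiting for a server error.
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if self.resolution not in ALLOWED_RESOLUTIONS:
            raise ValueError(f"resolution must be one of {ALLOWED_RESOLUTIONS}")
        if not MIN_SECONDS <= self.duration_seconds <= MAX_SECONDS:
            raise ValueError(
                f"duration must be between {MIN_SECONDS} and {MAX_SECONDS} seconds"
            )

    def to_payload(self) -> dict:
        """Build a request body; the field names here are assumed."""
        return {
            "prompt": self.prompt,
            "resolution": self.resolution,
            "duration_seconds": self.duration_seconds,
        }
```

Validating locally keeps failed requests from consuming quota or queue time. Whether a given variant accepts every resolution is not stated on this page.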

Why Use Wan2.5 Video Models on Atlas Cloud

Combining the advanced Wan2.5 Video Models with Atlas Cloud's GPU-accelerated platform provides unmatched performance, scalability, and developer experience.

Reality in motion. Powered by Atlas Cloud, Wan 2.5 brings life, light, and emotion into every frame with natural precision.

Performance & flexibility

Low Latency:

GPU-optimized inference for low-latency generation.

Unified API:

Run Wan2.5 Video Models, GPT, Gemini, and DeepSeek with one integration.

Transparent Pricing:

Predictable per-token billing with serverless options.

Enterprise & Scale

Developer Experience:

SDKs, analytics, fine-tuning tools, and templates.

Reliability:

99.99% uptime, RBAC, and compliance-ready logging.

Security & Compliance:

SOC 2 Type II, HIPAA alignment, and data sovereignty in the US.
