Introduction: The Coming Seedream 5.0 in Mid-February

With Seedream 4.5 having been released only recently, rumors about the launch of Seedream 5.0 in mid-February are already spreading like wildfire.

Seedream is one of the "Big Three" in the AIGC domain, alongside Google's Nano Banana Pro and OpenAI's GPT-Image, so each major update from ByteDance tends to signal a shift in how images are generated.

Atlas Cloud will launch the Seedream 5.0 API on Day 0. We have fully integrated the Seedream series, including the latest Seedream 4.5, and will continue to deeply test and optimize the runtime environment for the upcoming release.

If you care about generation speed, multimodal fusion, and precision UI rendering, this predictive guide is your best preparation for the 5.0 era.

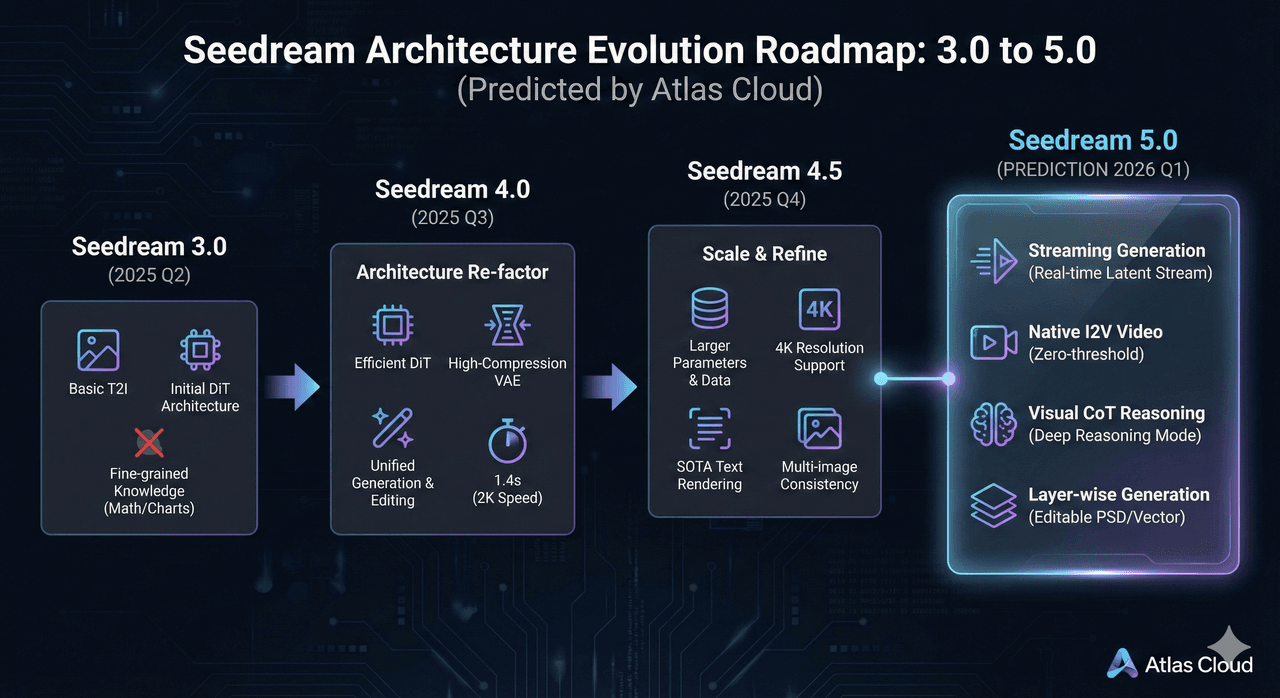

The Evolution Curve: Decoding the Roadmap from 3.0 to 5.0

To predict 5.0, we must first understand the foundation laid by Seedream 4.5. According to the technical documentation, the Seedream series follows a clear evolutionary logic: Efficiency -> Quality -> Multimodality.

- Seedream 3.0 (2025 Q2): Laid the groundwork but lacked fine-grained knowledge capabilities (e.g., mathematical formulas, charts) and struggled with natural image distribution.

- Seedream 4.0 (2025 Q3): Architecture Refactor. Introduced an efficient DiT (Diffusion Transformer) and a high-compression VAE, cutting 2K image generation time to 1.4 seconds. Crucially, it unified Text-to-Image (T2I) and Image Editing into a single training pipeline.

- Seedream 4.5 (2025 Q4): Scale & Refine. Expanded parameters and data scale based on 4.0, achieving support for 4K resolution and SOTA (State of the Art) performance in text rendering and multi-image consistency.

Atlas Cloud Core Insight: Version 4.5 is essentially the "Complete Form" of the 4.0 architecture, designed to clear obstacles in image quality and resolution. With those largely addressed, we expect Seedream 5.0 to target "Interaction Paradigms" and "Modal Boundaries."

Seedream 5.0 Core Feature Predictions

Based on the "Adversarial Distillation" and "In-Context Reasoning" technical routes mentioned in the documentation, we predict four major updates for 5.0:

1. "Streaming" Generation & Editing

Current State: Seedream 4.0 already achieves 2K generation in 1.4 seconds.

- Prediction: Version 5.0 will likely introduce Real-time Latent Stream technology. Users will see the image change in real-time as they type the prompt. It is no longer a turn-based "Input -> Wait -> Result" process, but a "What You Think Is What You See" fluid interaction.

- Competitor Benchmark: This move will directly challenge the real-time advantage of FLUX.1 Kontext (max), leveraging ByteDance's powerful edge-side inference optimization (such as the 4/8-bit hybrid quantization mentioned in the docs) to achieve millisecond-level response on mobile devices.

2. Zero-Threshold Video Generation

Current State: Seedream 4.0 already possesses powerful "Multi-image Consistency" and "Character Continuity."

- Prediction: 5.0 will no longer distinguish between image and video models. Image-to-Video (I2V) will become a built-in basic feature. Based on the strong consistency of 4.5, after generating a character image, users can directly generate a 3-5 second high-fidelity video via text commands (e.g., "Make him turn around and smile") without switching models.

- Technical Support: The "Joint post-training" strategy mentioned in the documents has likely been extended to temporal data.

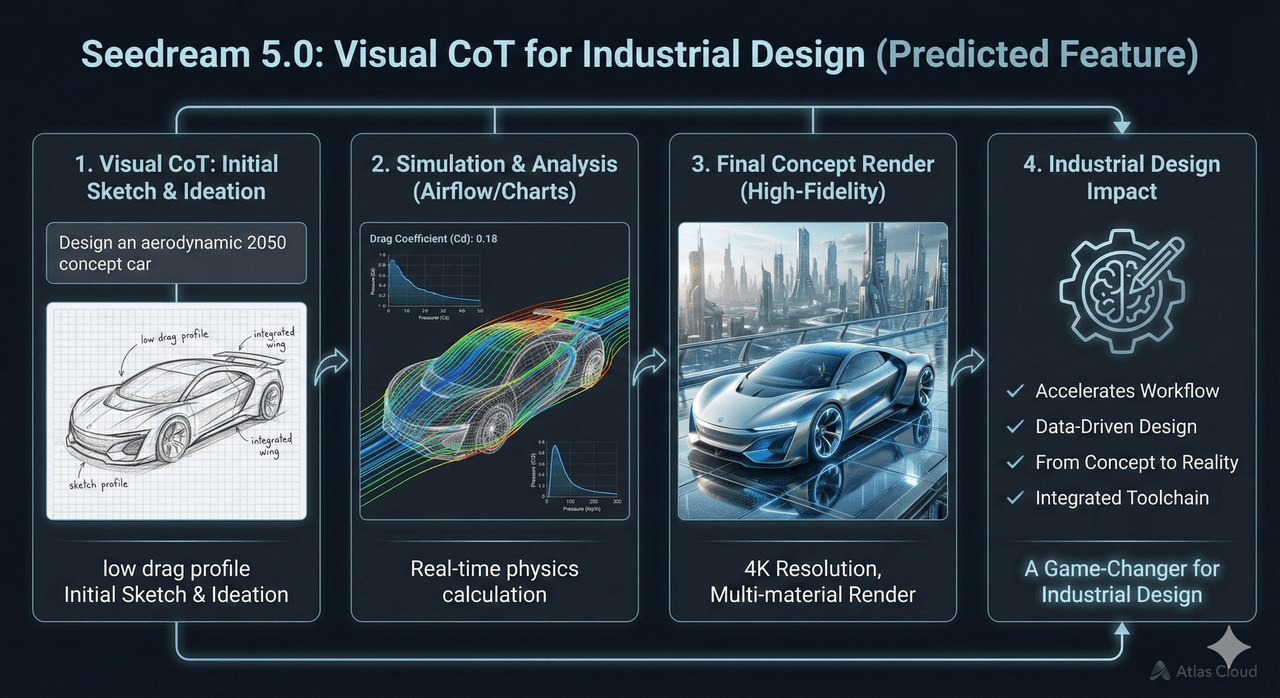

3. Visual Chain-of-Thought (Visual CoT)

Current State: The documents highlight 4.0's "In-Context Reasoning" capabilities, such as puzzle solving and comic continuation.

- Prediction: Targeting Google Nano Banana Pro's flagship "Thinking" mode, Seedream 5.0 will launch a Deep Reasoning Mode.

- Use Case: When you input "Design an aerodynamic 2050 concept car," the model won't just draw a car. It will first generate sketches, then calculate airflow structures (using its powerful formula/chart rendering ability), and finally render the finished product. This will be a killer feature for the industrial design sector.

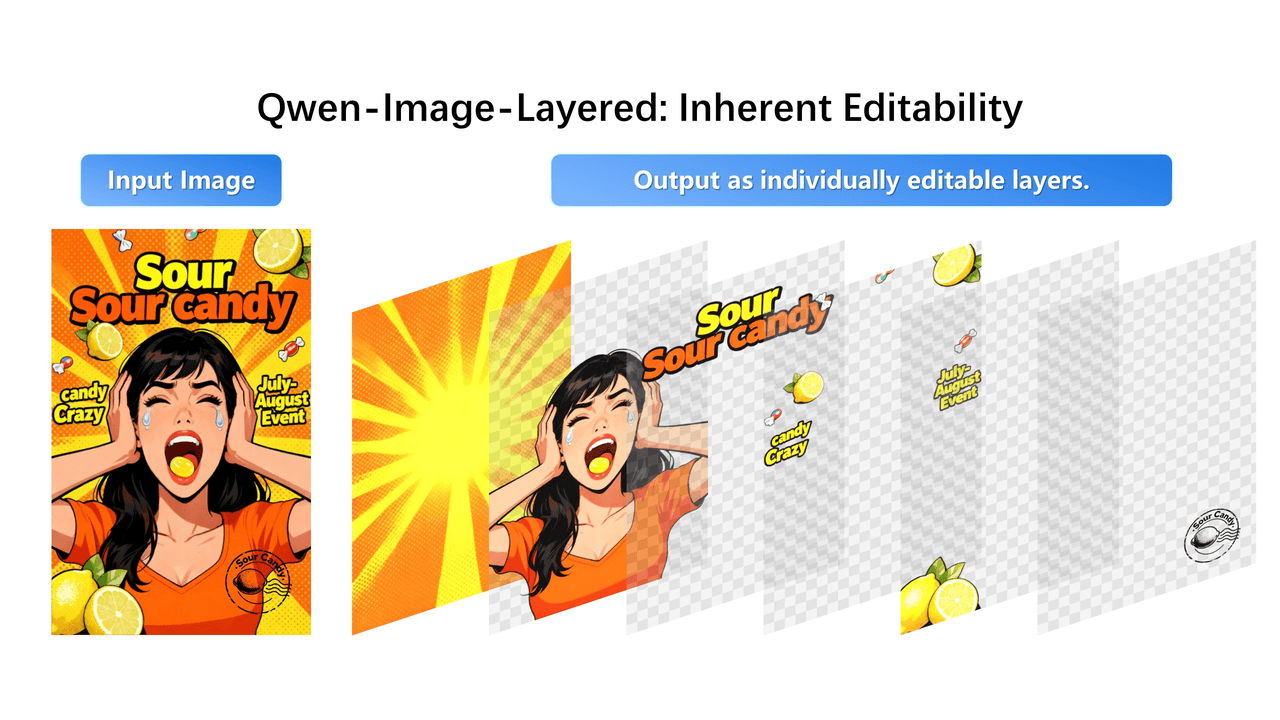

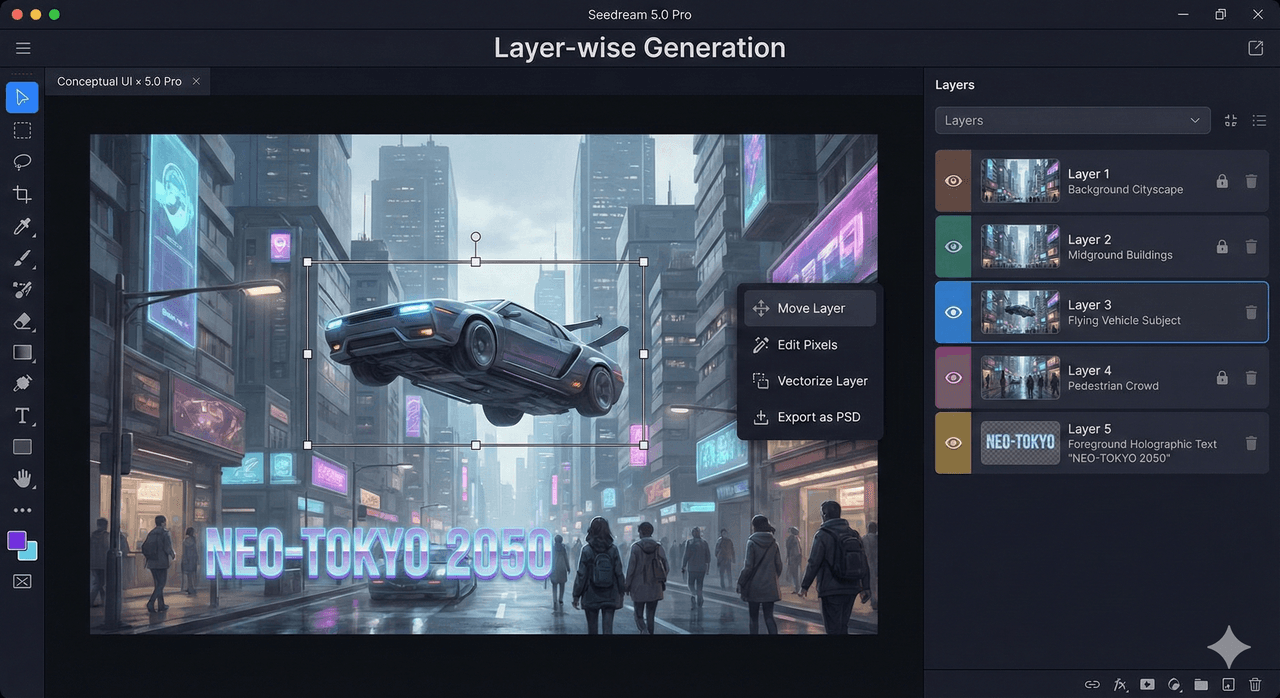

4. Layer-wise UI Rendering: From "Drawing" to "Designing"

Current State: Seedream 4.5 can already handle posters and long text with complex layouts. However, for professional design workflows, we predict a qualitative leap in 5.0.

- Predicted Feature: Layer-wise Generation. The generated images are no longer flat raster pixels but come with a "Layer" concept. Text, background, and subjects are separable, potentially supporting export to SVG/PSD formats.

- Deep Comparison: Seedream 5.0 (Predicted) vs. Qwen-Image (Layered)

- Qwen-Image Logic: The king of "Layout Control." Its strength lies in Grounding, outputting precise Bounding Box coordinates for every element—perfect for front-end automation and code generation.

- Seedream 5.0 Logic: While Qwen represents "Engineer Thinking" (Coordinates & Structure), Seedream 5.0 represents "Designer Thinking" (Rendering & Fusion). Based on "Visual Signal Controllable Generation," we predict Seedream 5.0 will achieve Style-Aware Vectorization.

- Real-World Difference: Ask for a "tech-style button": Qwen gives you a box and coordinates; Seedream 5.0 gives you a transparent PNG layer with glow effects, keeping the text editable—bridging the last mile from Prompt to Figma.

The Showdown: Seedream 5.0 vs. The Competition (2026 Q1)

Drawing on Atlas Cloud's existing evaluation data, here is our predicted side-by-side comparison of mainstream models for Q1 2026:

| Dimension | Seedream 5.0 (Predicted) | Nano Banana Pro (Google) | Qwen-Image (Layered) | GPT-Image-2 (OpenAI) | FLUX.1 Kontext |
| --- | --- | --- | --- | --- | --- |
| Speed | Millisecond Streaming | ★★★★ | ★★★ | ★★★ | ★★★★★ |
| Logic Reasoning | Visual CoT | ★★★★★ (Native Strength) | ★★★★ | ★★★★ | ★★★ |
| UI/Design | Layered + Vector (Designer's Choice) | Weak | Precise Layout/Coords (Engineer's Choice) | Mid | Weak |
| Chinese Understanding | 🇨🇳 Native Advantage | ★★★★ | ★★★★★ | ★★★ | ★★★ |
| Editability | Native Unified (Seamless) | Strong (Multi-turn) | General | Mid | Weak (Plugin dependent) |
| Core Moat | All-in-One Experience | Multi-modal Window | Layout Grounding | Ecosystem | Open Source |

Atlas Cloud Verdict: If Nano Banana Pro wins on logic and Qwen-Image wins on precise layout, Seedream 5.0's moat is the "All-in-One Integrated Experience." It is not just a drawing tool, but a "Visual Productivity Engine" that understands physical laws and works in layers like a human designer.

Why You Need Atlas Cloud

Seedream 5.0's expected capabilities will demand more computing resources. Technical documents show that Seedream relies on Hybrid Sharded Data Parallelism (HSDP) and extreme kernel optimization to achieve its efficiency.

Atlas Cloud is Fully Prepared:

- Day 0 API Access: We will launch the model and API integration on the very first day of Seedream 5.0's release.

- Enterprise-Level Acceleration: Targeting the "Quantization" and "Speculative Decoding" technologies mentioned in the docs, Atlas Cloud has performed targeted optimizations to significantly reduce inference costs.

Next Step: Want to get test access the moment Seedream 5.0 is released?

👉 [Subscribe to Atlas Cloud here]

How to Use Seedream on Atlas Cloud

Atlas Cloud lets you use models side by side — first in a playground, then via a single API.

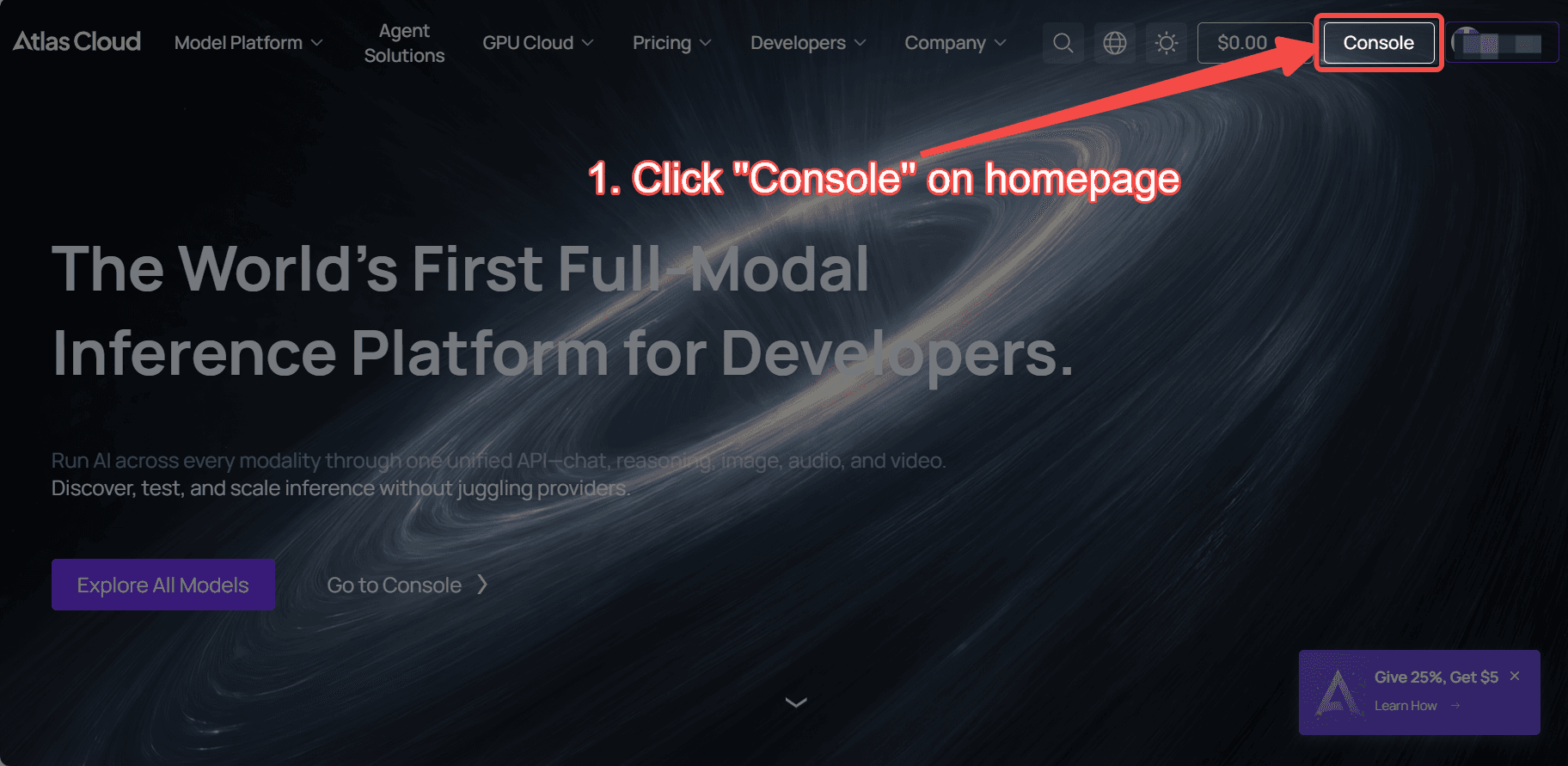

Method 1: Use directly in the Atlas Cloud playground

Method 2: Access via API

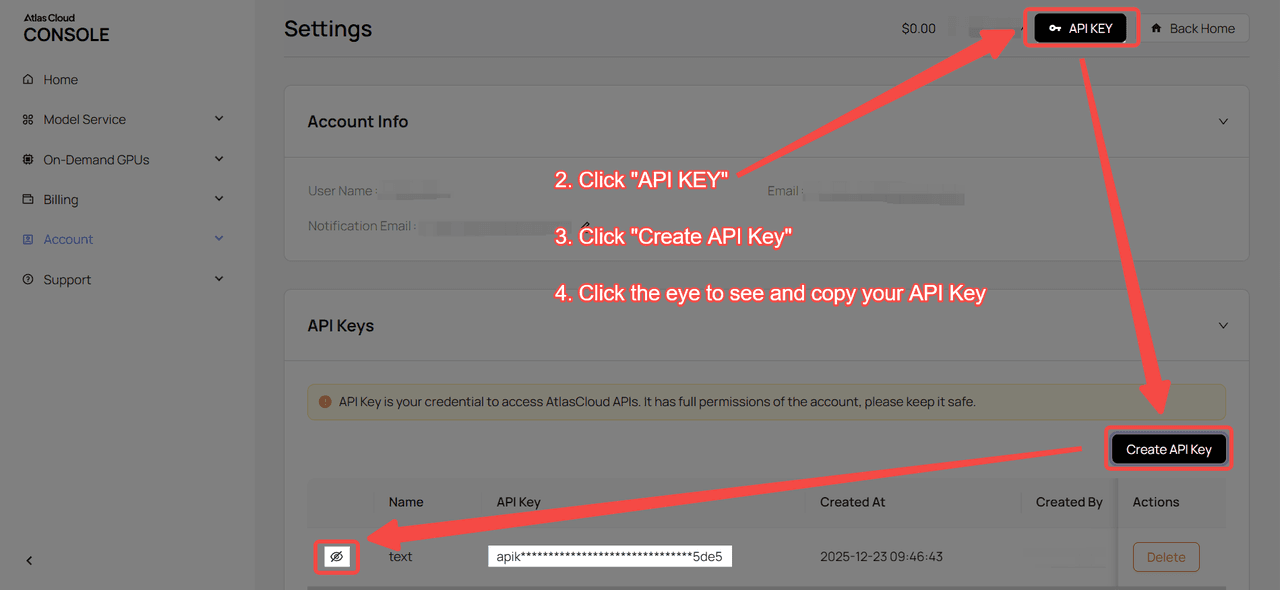

Step 1: Get your API key

Create an API key in your console and copy it for later use.
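One way to keep the key out of source code is to read it from an environment variable. The sketch here assumes the key has been exported as `ATLASCLOUD_API_KEY`, the placeholder name that appears in the Python request example in Step 3. `load_api_key` and `build_headers` are illustrative helper names, not part of any Atlas Cloud SDK.

```python
import os


def load_api_key(env=None, var_name="ATLASCLOUD_API_KEY"):
    """Return the API key from the environment, or raise if it is missing.

    `var_name` is an assumed variable name; adjust it to match your setup.
    """
    env = os.environ if env is None else env
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first")
    return key


def build_headers(api_key):
    """Build JSON request headers carrying the key as a Bearer token."""
    return {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
```

Calling `build_headers(load_api_key())` then yields a headers dictionary that can be passed to an HTTP client.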

Step 2: Check the API documentation

Review the endpoint, request parameters, and authentication method in our API docs.

Step 3: Make your first request (Python example)

Example: generate an image with Seedream 4.5:

```python
import os
import time

import requests

# Read the key from the environment; a literal "$ATLASCLOUD_API_KEY" string
# is not expanded by Python. Assumes the variable has been exported.
api_key = os.environ["ATLASCLOUD_API_KEY"]

# Step 1: Start image generation
generate_url = "https://api.atlascloud.ai/api/v1/model/generateImage"
headers = {
    "Content-Type": "application/json",
    "Authorization": f"Bearer {api_key}",
}
data = {
    "model": "bytedance/seedream-v4.5",
    "enable_base64_output": False,
    "prompt": "Nighttime urban photoshoot: A young woman stands on a rooftop terrace, leaning against a metal railing while adjusting her headphones. One foot crossed casually, relaxed yet focused. She wears a dark coat over a knit sweater and wide-leg trousers. City neon lights glow behind her with soft bokeh reflections, cinematic framing, vintage film photography style.",
    "size": "2048*2048",
}

generate_response = requests.post(generate_url, headers=headers, json=data)
generate_response.raise_for_status()
generate_result = generate_response.json()
prediction_id = generate_result["data"]["id"]

# Step 2: Poll for the result
poll_url = f"https://api.atlascloud.ai/api/v1/model/prediction/{prediction_id}"


def check_status():
    while True:
        response = requests.get(poll_url, headers={"Authorization": f"Bearer {api_key}"})
        result = response.json()
        status = result["data"]["status"]

        if status == "completed":
            print("Generated image:", result["data"]["outputs"][0])
            return result["data"]["outputs"][0]
        elif status == "failed":
            raise Exception(result["data"].get("error") or "Generation failed")
        else:
            # Still processing, wait 2 seconds
            time.sleep(2)


image_url = check_status()
```
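The polling loop in the example above runs until the job completes or fails, with no upper bound on waiting time. A bounded variant is sketched here, assuming the same `status`, `outputs`, and `error` fields seen in that example. `wait_for_result`, `fetch_status`, and the timeout defaults are hypothetical names and values chosen for illustration. The status fetcher is passed in as a function so the loop can be exercised without network access.

```python
import time


def wait_for_result(fetch_status, timeout_s=120.0, interval_s=2.0,
                    sleep=time.sleep, clock=time.monotonic):
    """Poll fetch_status() until the job completes, fails, or times out.

    fetch_status must return a dict shaped like the "data" object in the
    example response: {"status": ..., "outputs": [...], "error": ...}.
    """
    deadline = clock() + timeout_s
    while True:
        data = fetch_status()
        status = data.get("status")
        if status == "completed":
            return data["outputs"][0]
        if status == "failed":
            raise RuntimeError(data.get("error") or "Generation failed")
        if clock() >= deadline:
            raise TimeoutError(f"No result after {timeout_s} seconds")
        sleep(interval_s)


# Offline demonstration with canned responses instead of real HTTP calls.
_responses = iter([
    {"status": "processing"},
    {"status": "completed", "outputs": ["https://example.com/image.png"]},
])
demo_url = wait_for_result(lambda: next(_responses), sleep=lambda _: None)
print(demo_url)
```

With `requests`, `fetch_status` could be something like `lambda: requests.get(poll_url, headers=headers).json()["data"]`, reusing `poll_url` and `headers` from the example.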