Seedance 1.5 Video Models

Seedance is ByteDance’s family of video generation models, built for speed, realism, and scale. Its AI analyzes motion, setting, and timing to generate matching ambient sounds, then adds creative depth through spatial audio and atmosphere, making each video feel natural, immersive, and story-driven.

Explore the Leading Seedance 1.5 Video Models

Seedance v1 Pro Fast Text-to-video

An efficient text-to-video model geared toward fast, cost-effective generation. Ideal for prototyping short narrative clips (2–12 s) with stylistic flexibility and prompt-faithful motion.

Seedance v1 Pro Fast Image-to-video

Seedance Pro’s image-to-video mode transforms still visuals into cinematic motion, maintaining visual consistency and expressive animation across frames.

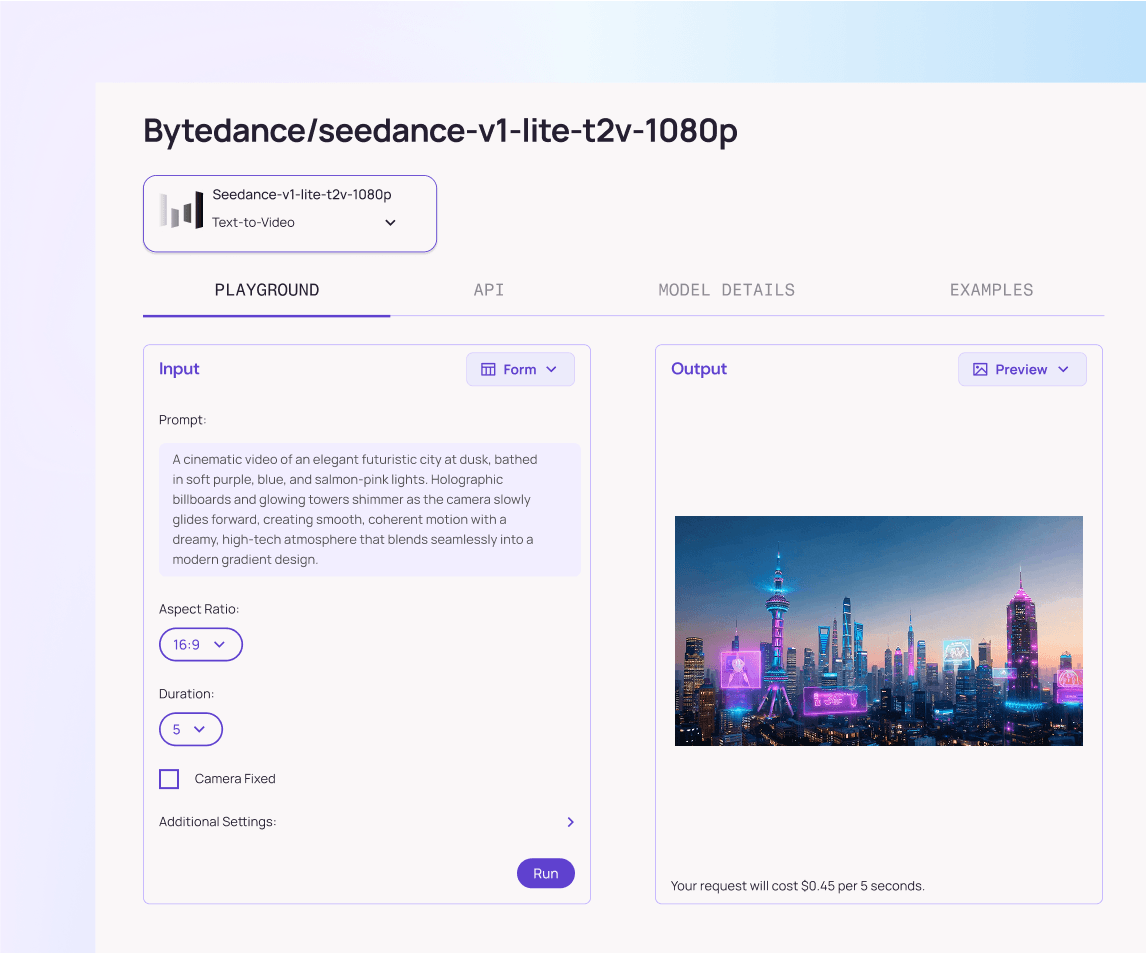

Seedance v1 Lite t2v 1080p

An efficient text-to-video model geared toward fast, cost-effective generation. Ideal for prototyping short narrative clips (5–10 s) with stylistic flexibility and prompt-faithful motion.

Seedance v1 Lite t2v 720p

An efficient text-to-video model geared toward fast, cost-effective generation. Ideal for prototyping short narrative clips (5–10 s) with stylistic flexibility and prompt-faithful motion.

Seedance v1 Lite i2v 1080p

Animate static images into dynamic video with the Lite model. Delivers motion, transitions, and stylistic coherence at lower latency and cost, while preserving source imagery.

Seedance v1 Pro t2v 1080p

A full-fidelity text-to-video model built for cinematic results. Generates multi-shot, 1080p videos with smooth motion, strong prompt adherence, and scene continuity.

Seedance v1 Pro t2v 720p

A full-fidelity text-to-video model built for cinematic results. Generates multi-shot, 720p videos with smooth motion, strong prompt adherence, and scene continuity.

Seedance v1 Pro t2v 480p

A full-fidelity text-to-video model built for cinematic results. Generates multi-shot, 480p videos with smooth motion, strong prompt adherence, and scene continuity.

Seedance v1 Pro i2v 720p

Seedance Pro’s image-to-video mode transforms still visuals into cinematic motion, maintaining visual consistency and expressive animation across frames.

Seedance v1 Pro i2v 480p

Seedance Pro’s image-to-video mode transforms still visuals into cinematic motion, maintaining visual consistency and expressive animation across frames.

Seedance v1 Pro i2v 1080p

Seedance Pro’s image-to-video mode transforms still visuals into cinematic motion, maintaining visual consistency and expressive animation across frames.

Seedance v1 Lite t2v 480p

An efficient text-to-video model geared toward fast, cost-effective generation. Ideal for prototyping short narrative clips (5–10 s) with stylistic flexibility and prompt-faithful motion.

Seedance v1 Lite i2v 720p

Animate static images into dynamic video with the Lite model. Delivers motion, transitions, and stylistic coherence at lower latency and cost, while preserving source imagery.

Seedance v1 Lite i2v 480p

Animate static images into dynamic video with the Lite model. Delivers motion, transitions, and stylistic coherence at lower latency and cost, while preserving source imagery.

What Makes Seedance 1.5 Video Models Stand Out

I2V & T2V

Supports video generation from text prompts and single images (multi-shot included).

Native Audio Generation

Generates diverse voices and spatial sound effects that are coordinated with the visuals for smoother storytelling.

Precision Lip-Sync

Supports a wide range of languages and dialects with accurate lip-sync and motion alignment.

Film-Grade Cinematography

Handles complex camera movement, from close-ups that capture subtle facial expressions and emotions to full shots with cinematic detail, composition, and atmosphere.

Multi-Resolution

Produces 480p, 720p, or 1080p video to balance quality and performance needs.

Production Ready

Optimized for fast deployment, scaling, and enterprise workloads.
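As a rough illustration of how the text-to-video and image-to-video modes and the three resolutions might be combined in a request, here is a minimal Python sketch. It only builds and validates a payload and makes no network call. The tier labels, the field names (model, prompt, duration, image), and the model ID format are assumptions made for illustration, not the documented Atlas Cloud or Seedance API. The clip-length ranges are the ones quoted in the text-to-video descriptions above (2–12 s for Pro Fast, 5–10 s for Lite); applying them to image-to-video requests as well is also an assumption.

```python
from dataclasses import dataclass
from typing import Optional

# Resolutions named on this page.
RESOLUTIONS = ("480p", "720p", "1080p")

# Clip-length ranges (seconds) quoted in the text-to-video descriptions above.
# Tiers without a stated range on this page (e.g. Pro) are not checked.
DURATION_RANGE_S = {
    "pro-fast": (2, 12),
    "lite": (5, 10),
}


@dataclass(frozen=True)
class VideoRequest:
    tier: str                        # "lite", "pro", or "pro-fast" (hypothetical labels)
    prompt: str
    resolution: str = "720p"
    duration_s: int = 5
    image_url: Optional[str] = None  # set for image-to-video, leave unset for text-to-video

    @property
    def mode(self) -> str:
        # An input image switches the request from t2v to i2v.
        return "i2v" if self.image_url else "t2v"


def build_payload(req: VideoRequest) -> dict:
    """Validate a request and return a JSON-ready dict (field names are assumed)."""
    if req.resolution not in RESOLUTIONS:
        raise ValueError(f"unsupported resolution: {req.resolution}")
    if req.tier in DURATION_RANGE_S:
        lo, hi = DURATION_RANGE_S[req.tier]
        if not lo <= req.duration_s <= hi:
            raise ValueError(f"{req.tier} clips must be {lo}-{hi} s, got {req.duration_s}")
    payload = {
        # Hypothetical ID format; check the provider's model list for real IDs.
        "model": f"seedance-v1-{req.tier}-{req.mode}-{req.resolution}",
        "prompt": req.prompt,
        "duration": req.duration_s,
    }
    if req.image_url:
        payload["image"] = req.image_url
    return payload
```

For example, build_payload(VideoRequest(tier="lite", prompt="a paper boat drifting down a gutter", resolution="480p")) returns a dict whose model field is "seedance-v1-lite-t2v-480p" under the assumed naming scheme, while a Lite request with duration_s=12 is rejected before anything is sent.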

What You Can Do with Seedance 1.5 Video Models

Generate 480p, 720p, or 1080p video from text prompts or static images.

Produce explainer videos, ads, and social content at scale.

Refine videos with direct or sequential editing.

Customize workflows with adjustable speed and fidelity.

Tell stories with strong emotional expression.

Why Use Seedance 1.5 Video Models on Atlas Cloud

Combining the advanced Seedance 1.5 Video Models with Atlas Cloud's GPU-accelerated platform provides unmatched performance, scalability, and developer experience.

First-person POV from the front seat of a giant steel roller coaster. The coaster crests the peak and plunges straight down into a dark tunnel. Surrounding scenery (an amusement park at sunset) is slightly blurred, while the wind is represented as whistling air particles.

Performance & flexibility

Low Latency:

GPU-optimized inference for real-time reasoning.

Unified API:

Run Seedance 1.5 Video Models, GPT, Gemini, and DeepSeek with one integration.

Transparent Pricing:

Predictable per-token billing with serverless options.

Enterprise & Scale

Developer Experience:

SDKs, analytics, fine-tuning tools, and templates.

Reliability:

99.99% uptime, RBAC, and compliance-ready logging.

Security & Compliance:

SOC 2 Type II, HIPAA alignment, data sovereignty in the US.

Explore More Families

Moonshot LLM Models

The Moonshot LLM family delivers cutting-edge performance on real-world tasks, combining strong reasoning with ultra-long context to power complex assistants, coding, and analytical workflows, making advanced AI easier to deploy in production products and services.

Wan2.6 Video Models

Wan 2.6 is Alibaba’s state-of-the-art multimodal video generation model, capable of producing high-fidelity, audio-synchronized videos from text or images. It lets you create videos of up to 15 seconds while preserving narrative flow and visual integrity, which makes it well suited to YouTube Shorts, Instagram Reels, Facebook clips, and TikTok videos.

Flux.2 Image Models

The Flux.2 Series is a comprehensive family of AI image generation models. Across the lineup, Flux supports text-to-image, image-to-image, reconstruction, contextual reasoning, and high-speed creative workflows.

Nano Banana Image Models

Nano Banana is a fast, lightweight image generation model for playful, vibrant visuals. Optimized for speed and accessibility, it creates high-quality images with smooth shapes, bold colors, and clear compositions—perfect for mascots, stickers, icons, social posts, and fun branding.

Image and Video Tools

Open, advanced large-scale image generative models that power high-fidelity creation and editing with modular APIs, reproducible training, built-in safety guardrails, and elastic, production-grade inference at scale.

LTX-2 Video Models

LTX-2 is a complete AI creative engine. Built for real production workflows, it delivers synchronized audio and video generation, 4K video at 48 fps, multiple performance modes, and radical efficiency, all with the openness and accessibility of running on consumer-grade GPUs.

Qwen Image Models

Qwen-Image is Alibaba’s open image generation model family. Built on advanced diffusion and Mixture-of-Experts design, it delivers cinematic quality, controllable styles, and efficient scaling, empowering developers and enterprises to create high-fidelity media with ease.

OpenAI Model Families

Explore OpenAI’s language and video models on Atlas Cloud: ChatGPT for advanced reasoning and interaction, and Sora-2 for physics-aware video generation.

Hailuo Video Models

MiniMax Hailuo video models deliver text-to-video and image-to-video at native 1080p (Pro) and 768p (Standard), with strong instruction following and realistic, physics-aware motion.

Wan2.5 Video Models

Wan 2.5 is Alibaba’s state-of-the-art multimodal video generation model, capable of producing high-fidelity, audio-synchronized videos from text or images. It delivers realistic motion, natural lighting, and strong prompt alignment across 480p to 1080p outputs—ideal for creative and production-grade workflows.

Sora-2 Video Models

The Sora-2 family from OpenAI is a next-generation line of video and audio generation models, enabling both text-to-video and image-to-video outputs with synchronized dialogue, sound effects, improved physical realism, and fine-grained control.

Only at Atlas Cloud.