Wan2.5 Video Models

Wan 2.5 is Alibaba’s state-of-the-art multimodal video generation model, capable of producing high-fidelity, audio-synchronized videos from text or images. It delivers realistic motion, natural lighting, and strong prompt alignment across 480p to 1080p outputs—ideal for creative and production-grade workflows.

Explore the Leading Models

Wan-2.5 Video Extend Fast

Extend your videos, with audio, using Alibaba’s Wan 2.5 video extender model.

Wan-2.5 Video Extend

Extend your videos, with audio, using Alibaba’s Wan 2.5 video extender model.

Wan-2.5 Text-to-video Fast

Convert prompts into cinematic video clips with synchronized sound. Wan 2.5 generates 480p/720p/1080p outputs with stable motion, native audio sync, and prompt-faithful visual storytelling.

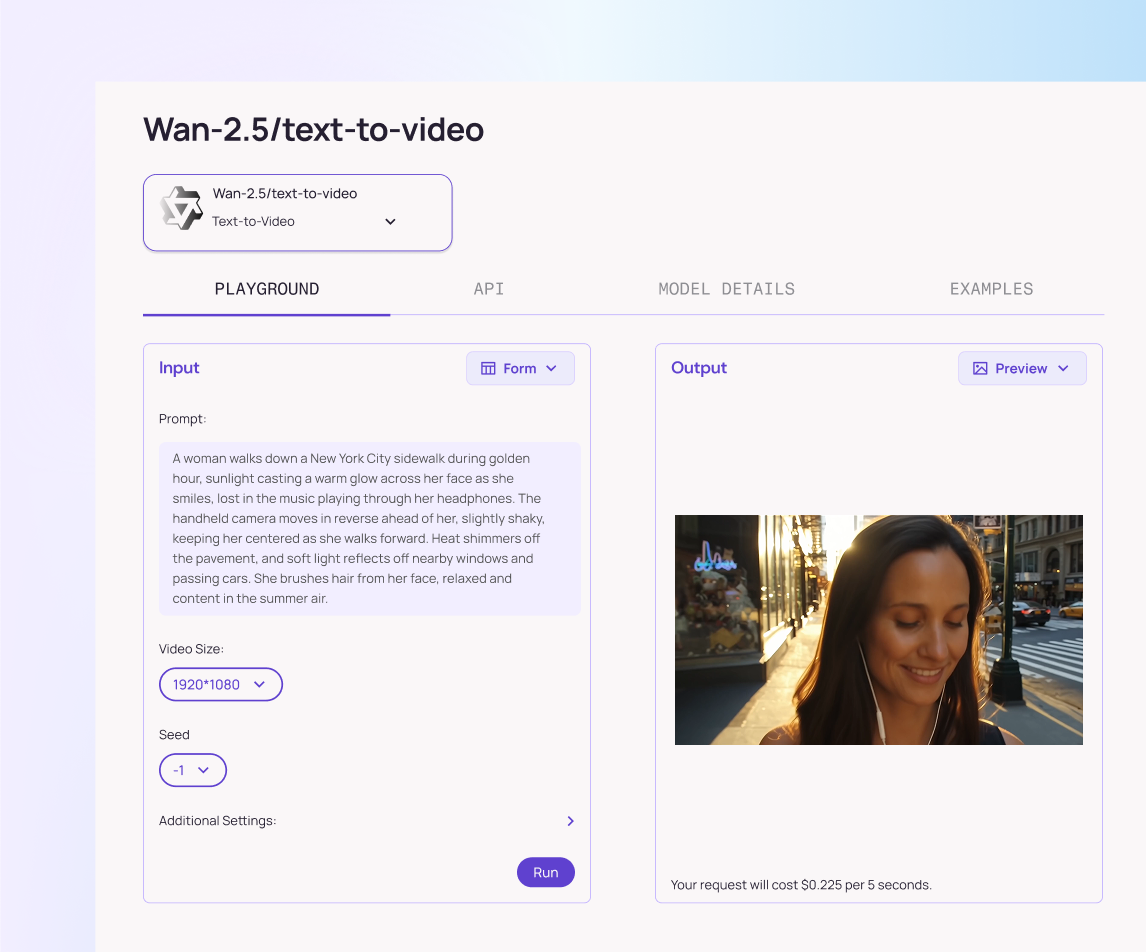

Wan-2.5 Text-to-video

A speed-optimized text-to-video option that prioritizes lower latency while retaining strong visual fidelity. Ideal for iteration, batch generation, and prompt testing.

Wan-2.5 Image-to-video

Bring static images to life with dynamic motion, lighting consistency, and synchronized audio. This variant smoothly animates reference visuals into short video sequences.

Wan-2.5 Image-to-video Fast

Get animated visuals from your images faster without a major quality sacrifice. Suited to preview workflows at scale and mass production of animated assets.

Wan-2.5 Image Edit

Open and Advanced Large-Scale Image Generative Models.

Wan-2.5 Text-to-image

Generate AI images with Alibaba’s Wan 2.5 text-to-image model.

What Stands Out - Wan2.5 Video Models

Native A/V Sync

Generates perfectly aligned visuals and sound without extra editing.

Unified Multimodal Core

Handles text, image, video, and audio in one seamless model.

Extended Clip Duration

Creates up to 10-second videos for richer storytelling.

Creative Flexibility

Works from text or images to generate or animate content.

Flexible Prompt Inputs

Understands Chinese, English, and other languages natively.

Cinematic Control

Direct camera motion, pacing, and composition right from your prompt.

What You Can Do - Wan2.5 Video Models

Generate cinematic clips of 5 to 10 seconds at 480p, 720p, or 1080p resolution.

Sync visuals and audio in one pass — lip-sync, dialogue, sound effects and background music come together automatically.

Animate any image by turning still visuals into dynamic motion with matching audio cues.

Localize with multilingual prompts: Chinese, English, and more are supported natively.

Preview ideas rapidly with the Fast model variants to test concepts before a full render.
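The limits listed above (5 to 10 second clips at 480p, 720p, or 1080p, with Fast variants for previews) can be checked on the client side before anything is submitted. The following is a minimal sketch under stated assumptions: `ClipRequest`, its field names, and `preview_then_final` are hypothetical and are not part of any documented Atlas Cloud or Wan 2.5 API, and the choice of a 480p preview is only an example.

```python
from __future__ import annotations

from dataclasses import dataclass

# Limits taken from the page: 5 to 10 second clips at 480p, 720p, or 1080p.
ALLOWED_RESOLUTIONS = ("480p", "720p", "1080p")
MIN_SECONDS, MAX_SECONDS = 5, 10


@dataclass(frozen=True)
class ClipRequest:
    """Hypothetical request shape; the field names are illustrative only."""
    prompt: str
    resolution: str = "720p"
    duration_seconds: int = 5
    fast: bool = False  # True = target a Fast variant for quick previews


def validate(req: ClipRequest) -> list[str]:
    """Return a list of problems; an empty list means the request fits the stated limits."""
    problems = []
    if not req.prompt.strip():
        problems.append("prompt is empty")
    if req.resolution not in ALLOWED_RESOLUTIONS:
        problems.append(f"resolution must be one of {ALLOWED_RESOLUTIONS}")
    if not MIN_SECONDS <= req.duration_seconds <= MAX_SECONDS:
        problems.append(f"duration must be {MIN_SECONDS}-{MAX_SECONDS} seconds")
    return problems


def preview_then_final(prompt: str) -> tuple[ClipRequest, ClipRequest]:
    """Pair a quick Fast preview with a full-quality final render of the same prompt."""
    preview = ClipRequest(prompt, resolution="480p", duration_seconds=5, fast=True)
    final = ClipRequest(prompt, resolution="1080p", duration_seconds=10, fast=False)
    return preview, final
```

Validating locally keeps out-of-range requests (for example a 15-second clip) from being sent at all; the real request format should be taken from the platform's own API reference.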

Why Use Wan2.5 Video Models on Atlas Cloud

Combining the advanced Wan2.5 Video Models with Atlas Cloud’s GPU-accelerated platform delivers unmatched performance, scalability, and developer experience.

Reality in motion. Powered by Atlas Cloud, Wan 2.5 brings life, light, and emotion into every frame with natural precision.

Performance & Flexibility

Low Latency:

GPU-optimized inference for real-time reasoning.

Unified API:

Run Wan2.5 Video Models, GPT, Gemini, and DeepSeek with one integration.

Transparent Pricing:

Predictable token-based billing with serverless options.

Enterprise & Scale

Developer Experience:

SDKs, analytics, fine-tuning tools, and templates.

Reliability:

99.99% uptime, RBAC, and compliance-ready logging.

Security & Compliance:

SOC 2 Type II, HIPAA alignment, data sovereignty in the US.
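To put the 99.99% availability figure in concrete terms, it can be converted into a downtime budget. This is a small arithmetic sketch only: the page does not state the window over which availability is measured, so the yearly and 30-day periods below are assumptions, and `allowed_downtime_minutes` is a hypothetical helper name.

```python
def allowed_downtime_minutes(availability_percent: float, period_days: float) -> float:
    """Minutes of downtime permitted over the period at the given availability."""
    unavailable_fraction = 1.0 - availability_percent / 100.0
    return unavailable_fraction * period_days * 24 * 60


per_year = allowed_downtime_minutes(99.99, 365)
per_30_days = allowed_downtime_minutes(99.99, 30)
print(f"{per_year:.1f} min/year, {per_30_days:.1f} min per 30 days")
# prints "52.6 min/year, 4.3 min per 30 days"
```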

Explore More Families

Z.ai LLM Models

The Z.ai LLM family pairs strong language understanding and reasoning with efficient inference to keep costs low, offering flexible deployment and tooling that make it easy to customize and scale advanced AI across real-world products.

Seedance 1.5 Video Models

Seedance is ByteDance’s family of video generation models, built for speed, realism, and scale. Its AI analyzes motion, setting, and timing to generate matching ambient sounds, then adds creative depth through spatial audio and atmosphere, making each video feel natural, immersive, and story-driven.

Moonshot LLM Models

The Moonshot LLM family delivers cutting-edge performance on real-world tasks, combining strong reasoning with ultra-long context to power complex assistants, coding, and analytical workflows, making advanced AI easier to deploy in production products and services.

Wan2.6 Video Models

Wan 2.6 is Alibaba’s state-of-the-art multimodal video generation model, capable of producing high-fidelity, audio-synchronized videos from text or images. Wan 2.6 will let you create videos of up to 15 seconds, ensuring narrative flow and visual integrity. It is perfect for creating YouTube Shorts, Instagram Reels, Facebook clips, and TikTok videos.

Flux.2 Image Models

The Flux.2 Series is a comprehensive family of AI image generation models. Across the lineup, Flux supports text-to-image, image-to-image, reconstruction, contextual reasoning, and high-speed creative workflows.

Nano Banana Image Models

Nano Banana is a fast, lightweight image generation model for playful, vibrant visuals. Optimized for speed and accessibility, it creates high-quality images with smooth shapes, bold colors, and clear compositions—perfect for mascots, stickers, icons, social posts, and fun branding.

Image and Video Tools

Open, advanced large-scale image generative models that power high-fidelity creation and editing with modular APIs, reproducible training, built-in safety guardrails, and elastic, production-grade inference at scale.

LTX-2 Video Models

LTX-2 is a complete AI creative engine. Built for real production workflows, it delivers synchronized audio and video generation, 4K video at 48 fps, multiple performance modes, and radical efficiency, all with the openness and accessibility of running on consumer-grade GPUs.

Qwen Image Models

Qwen-Image is Alibaba’s open image generation model family. Built on advanced diffusion and Mixture-of-Experts design, it delivers cinematic quality, controllable styles, and efficient scaling, empowering developers and enterprises to create high-fidelity media with ease.

OpenAI Model Families

Explore OpenAI’s language and video models on Atlas Cloud: ChatGPT for advanced reasoning and interaction, and Sora-2 for physics-aware video generation.

Hailuo Video Models

MiniMax Hailuo video models deliver text-to-video and image-to-video at native 1080p (Pro) and 768p (Standard), with strong instruction following and realistic, physics-aware motion.

Only on Atlas Cloud.